In Central and Eastern Europe, a nation is building one of the continent’s most ambitious artificial intelligence infrastructures. Ukraine’s recent partnership with NVIDIA to build what officials call a “sovereign AI state” brings together digital-independence principles and technological ambition, and it deserves close attention from European neighbors and technology leaders worldwide.

In this article you will discover:

- How Ukraine is building world-class AI supercomputing infrastructure

- The four strategic pillars of the Ukraine-NVIDIA partnership

- The critical role of vendor-neutral networking in sovereign infrastructure

When Digital Infrastructure Becomes a Strategic Priority

Ukraine’s digital transformation over the past few years has been remarkable. The Diia app, which lets citizens access government services, store digital IDs, and sign legal documents, seemed revolutionary when it launched. But what Ukraine is doing now represents the next evolution.

In November 2025, Mykhailo Fedorov, Ukraine’s First Deputy Prime Minister and Minister of Digital Transformation, announced a comprehensive partnership with NVIDIA to build essential sovereign infrastructure. He emphasized that building sovereign AI is a matter of national security and data protection, especially in wartime.

The strategic importance is clear: as nations increasingly recognize AI and open networking as critical infrastructure, Ukraine is investing in indigenous capabilities and operational independence.


The Four Pillars: Comprehensive Infrastructure Development

What distinguishes this partnership from typical government IT contracts is its comprehensive scope. Ukraine is building an entire ecosystem around four strategic pillars:

1. National AI Infrastructure

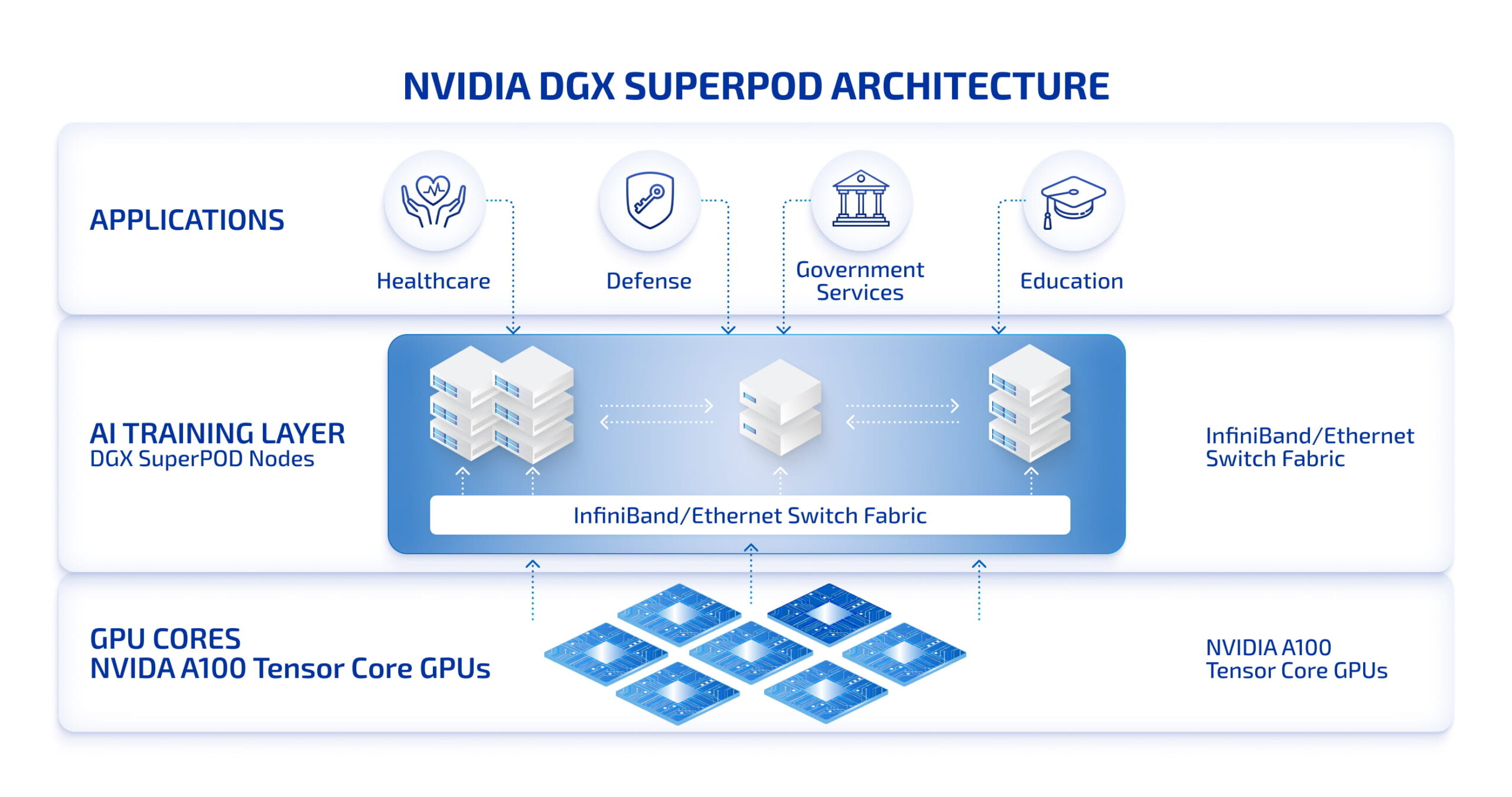

At the heart of this initiative is the “AI Factory,” a supercomputing environment built on NVIDIA’s DGX SuperPOD systems, which can scale to tens of thousands of GPUs. These enterprise-grade platforms are used to train trillion-parameter models for applications ranging from advanced language models to cutting-edge medical research.

The timing is strategic. Ukrainian private cloud providers have already invested more than US$2.5 million in NVIDIA H200, H100, and L40S GPUs. The government is now creating a standardized platform that the entire tech ecosystem can use.

2. Talent Development

The partnership includes structured workshops, architectural review sessions with NVIDIA experts, and AI education programs. The aim is genuine technology transfer, internalizing the knowledge needed to build and maintain the infrastructure independently. This addresses a critical challenge: building domestic AI expertise at scale while competing with higher-paying opportunities abroad.

3. Joint Research & Development

Ukraine and NVIDIA are collaborating on custom AI models adapted to Ukrainian legislation, language, and specific governance needs. The complexity is substantial: training an AI that understands Ukrainian administrative law, can process requests in Ukrainian (with all its linguistic nuances), and operates within the country’s legal framework requires far more than off-the-shelf solutions.

4. Startup Ecosystem Support

The commitment to support Ukraine’s AI startup scene is particularly strategic. By providing essential resources, the government is subsidizing innovation. Local companies can develop tech solutions, healthcare applications, and commercial products using world-class computing power, democratizing access to expensive infrastructure.

From Gemini to Sovereign: The Diia AI Architecture Evolution

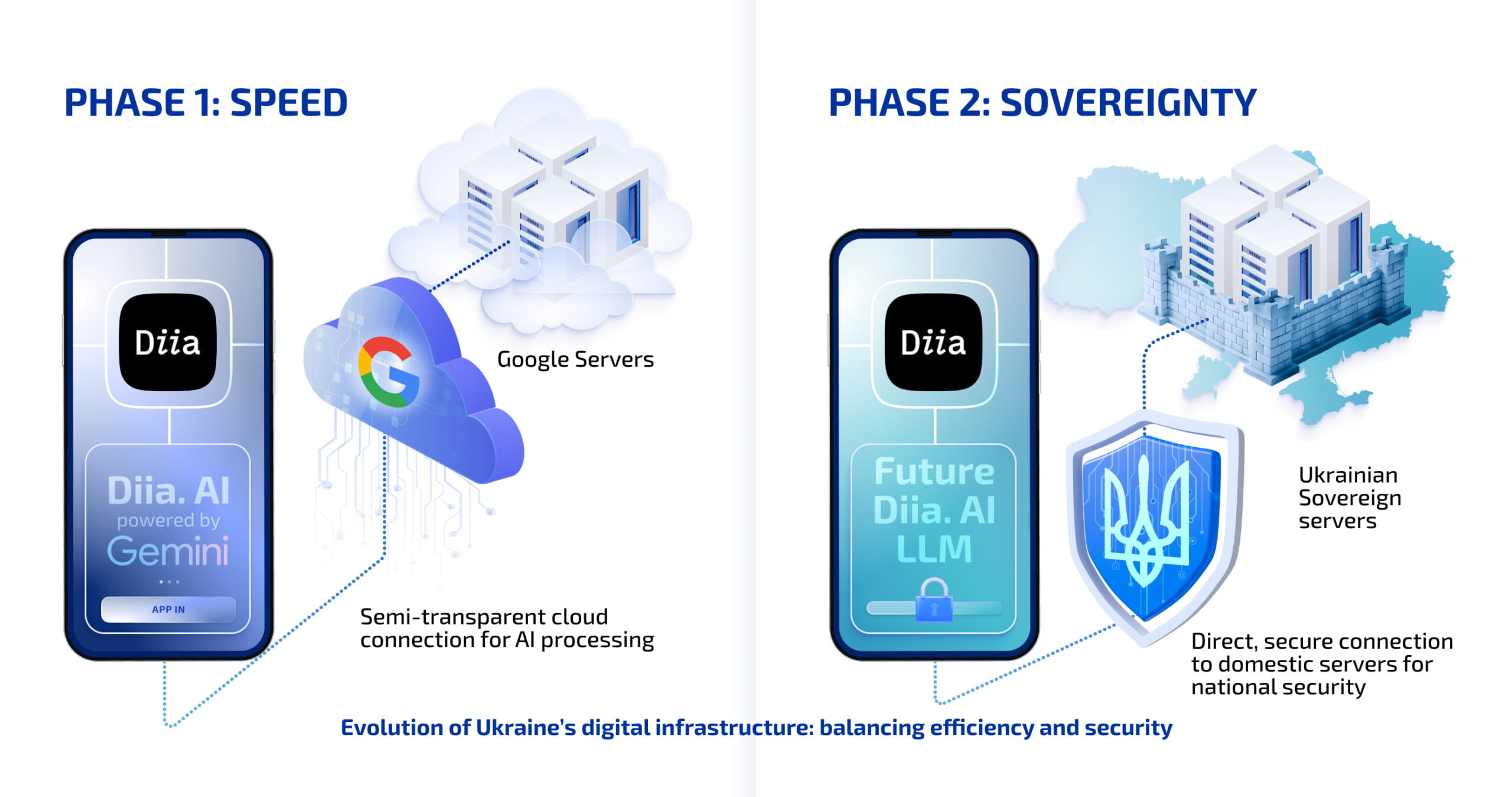

In September 2025, Ukraine launched Diia.AI, which officials called the world’s first AI assistant integrated into a national public services system. The platform answers questions about taxes, permits, and social services in real time, providing genuinely impressive functionality.

The initial implementation runs on Google’s Gemini model – an excellent choice for rapid deployment and proof-of-concept validation.

However, for long-term digital sovereignty, relying on an external AI model for government services creates strategic dependencies. Questions around model integrity, data governance, and operational continuity drive the need for indigenous solutions.

This is why the centerpiece of the NVIDIA partnership is developing the Diia AI LLM – a sovereign large language model specifically adapted to Ukrainian legislation, public services, and citizens’ needs. It’s a strategic transition from rapid deployment to sustainable sovereignty.

The Road Ahead: Technical and Strategic Challenges

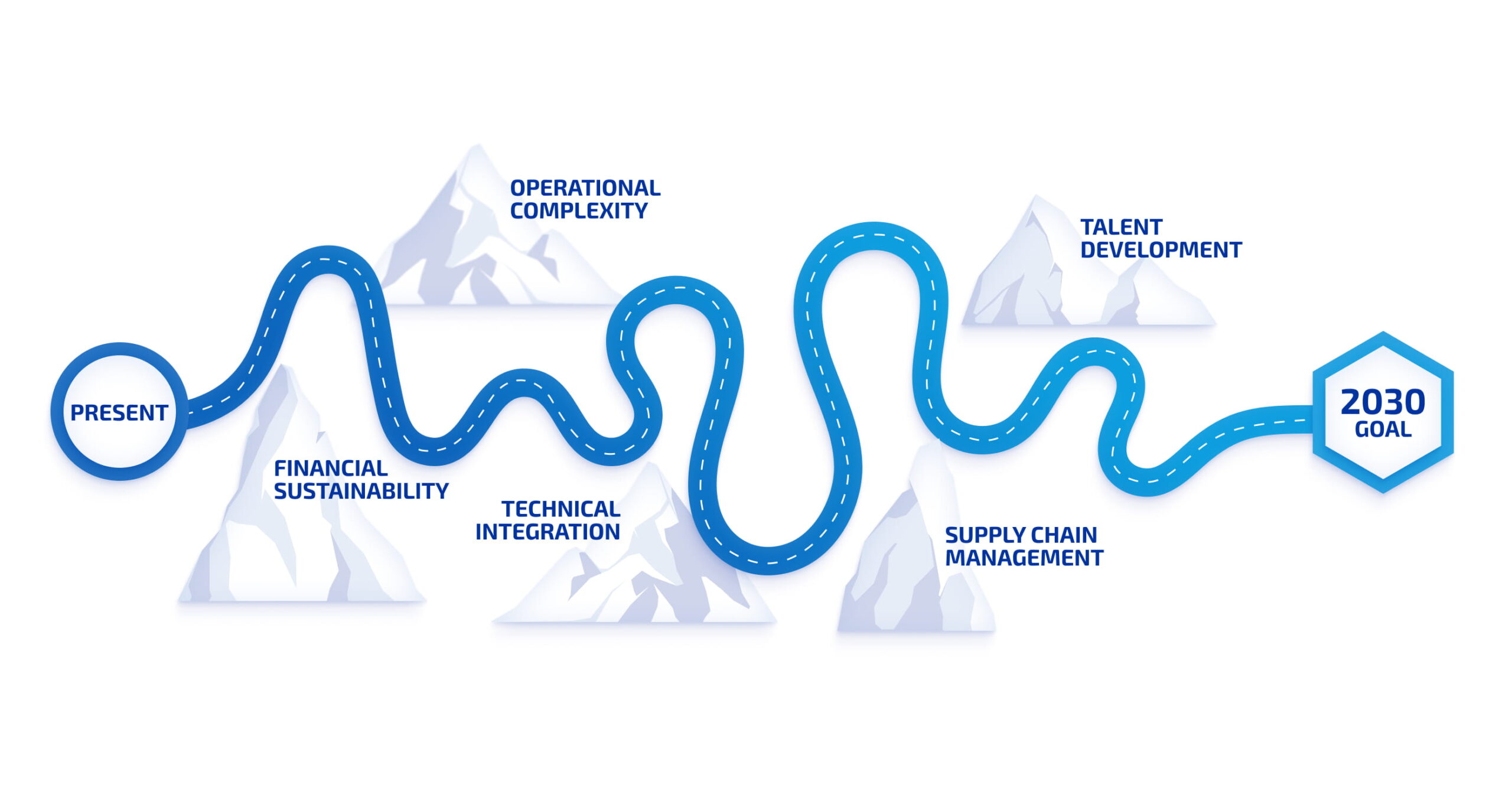

Building AI supercomputing infrastructure at national scale presents real challenges, and maintaining it to enterprise-grade standards demands sustained commitment.

Financial sustainability is the most obvious concern. High-performance NVIDIA systems require massive capital expenditure for the initial purchase, plus ongoing operating costs for maintenance, power, cooling, and regular upgrades. Ukraine will need sustained investment, potentially blending national budget allocations, international donor funding (such as the €250,000 from Estonia’s ESTDEV for strategy development), and creative public-private partnerships.

Operational excellence also presents unique technical challenges. The AI Factory becomes critical national infrastructure requiring enterprise-grade reliability. This demands geographic dispersion, aggressive redundancy planning, backup power generation, and operational protocols that exceed typical data center standards.
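To make the redundancy argument concrete, here is a minimal sketch of how N-way redundancy changes availability. It assumes failures are independent and that a redundant group stays up as long as at least one copy is up (1-of-N); the 0.99 per-site figure and the function names are hypothetical, chosen only for illustration.

```python
"""Illustrative sketch: how N-way redundancy changes availability.

Assumptions (hypothetical, not measured data): component failures are
independent, and a redundant group is up while at least one copy is up.
"""


def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability of a 1-of-N redundant group with independent failures."""
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be in [0, 1]")
    if copies < 1:
        raise ValueError("need at least one copy")
    # The group is down only if every copy is down at the same time.
    return 1.0 - (1.0 - component_availability) ** copies


def downtime_hours_per_year(availability: float) -> float:
    """Expected downtime over a 365-day year for a given availability."""
    return (1.0 - availability) * 365 * 24


if __name__ == "__main__":
    site = 0.99  # hypothetical availability of a single site or power feed
    for n in (1, 2, 3):
        a = parallel_availability(site, n)
        print(f"{n} copies: availability={a:.6f}, "
              f"downtime={downtime_hours_per_year(a):.2f} h/year")
```

With the hypothetical 0.99 input, a single site implies roughly 87.6 hours of downtime per year, while two independent copies raise availability to about 0.9999. The independence assumption is optimistic: sites sharing a power grid or a single supplier fail together, which is exactly why geographic dispersion and backup power matter.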

Vendor management is a strategic consideration. While NVIDIA provides world-class technology, dependency on any single supplier creates vulnerabilities. What happens if prices spike? If new hardware faces supply delays? If geopolitical situations shift? Ukraine needs to balance the immediate benefits of this partnership with long-term supply chain resilience – a challenge that extends to every layer of the infrastructure stack, from compute to networking to storage.

The Networking Foundation: Beyond AI Hardware

While NVIDIA provides Ukraine’s AI “intelligence” layer, the networking fabric under it is equally critical. True sovereignty means controlling every layer – including the network operating systems that connect data centers, manage traffic, and ensure resilience. A sovereign AI on sovereign hardware is vulnerable if it depends on proprietary networking equipment or software.

This same logic is now shaping Europe’s approach to digital sovereignty. To reduce dependency and regain control over critical infrastructure layers, the EU is turning to open-source networking solutions. PLVision, a leader in open disaggregated networking, exemplifies this approach through its work with the SONiC (Software for Open Networking in the Cloud) and DENT projects.


Just as Ukraine is transitioning from Google’s Gemini to its own Diia AI LLM, organizations are moving from proprietary network operating systems to open alternatives that offer vendor neutrality, cost optimization, full control over network infrastructure, and community-driven innovation.

PLVision blends deep switch-software engineering with active community leadership. Our offerings include:

- Custom product development based on open-source technologies, SONiC-based product development services for Telco use cases, and SwitchDev development.

- Open NOS enablement and customization for hardware, encompassing SONiC-DASH API implementation, porting Community SONiC to your hardware, and xPU/SmartNIC offload customization and support.

- Network infrastructure transition to open NOS, such as SONiC adoption for enterprise networks and building hardened Community SONiC images for data centers.

- SONiC Lite, PLVision’s enterprise distribution of Community SONiC, designed for cost-effective management and access platforms. It’s ideal for less demanding networking equipment in data center, campus, and edge deployments.

By owning your custom SONiC version, you gain full control, eliminate vendor lock-in and licensing fees, and align your infrastructure with modern demands.
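As an illustration of what this control can look like in practice, the sketch below renders a small SONiC configuration fragment as plain data that can be reviewed and version-controlled. The PORT, VLAN, and VLAN_MEMBER table names and their field names follow the layout of SONiC’s config_db.json; the helper function, port names, VLAN ID, speed, and MTU values are hypothetical, and platform-specific fields such as lanes and alias are deliberately omitted.

```python
"""Hypothetical helper that renders a minimal SONiC config_db.json fragment.

Table and field names follow SONiC's config_db.json layout; all concrete
values (ports, VLAN ID, speed, MTU) are illustrative assumptions.
Platform-specific PORT fields such as "lanes" and "alias" are omitted.
"""
import json


def build_access_vlan_fragment(vlan_id: int, ports: list[str],
                               speed_mbps: int = 100000,
                               mtu: int = 9100) -> dict:
    """Return config tables placing each port untagged in a single VLAN."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    vlan_name = f"Vlan{vlan_id}"
    return {
        # CONFIG_DB stores field values as strings.
        "PORT": {
            port: {"admin_status": "up", "speed": str(speed_mbps), "mtu": str(mtu)}
            for port in ports
        },
        "VLAN": {vlan_name: {"vlanid": str(vlan_id)}},
        # VLAN_MEMBER keys join the VLAN name and port name with "|".
        "VLAN_MEMBER": {
            f"{vlan_name}|{port}": {"tagging_mode": "untagged"} for port in ports
        },
    }


if __name__ == "__main__":
    fragment = build_access_vlan_fragment(100, ["Ethernet0", "Ethernet4"])
    print(json.dumps(fragment, indent=4, sort_keys=True))
```

Generating configuration from code rather than hand-editing it keeps every change reviewable and reproducible across switches. A fragment like this would still need to be merged with the device’s platform-specific settings and applied through its normal configuration workflow.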

Conclusions

Ukraine has defined sovereignty practically: state-controlled infrastructure, domestically managed data pipelines, and nationally adapted AI models.

For Ukraine’s neighbors, partners, and allies across Europe, this initiative offers lessons in digital sovereignty, opportunities for technological collaboration, and a reminder that innovation often emerges from strategic necessity and real-world constraints.

At the networking layer, an open-source NOS such as SONiC lets organizations apply the same principles: controlled infrastructure, locally managed data pipelines, and networking customized for different workloads.

Ready to explore how open-source solutions can enhance your business?

- Building Sovereign AI: Ukraine’s Comprehensive Infrastructure Initiative - January 16, 2026