Drawing on our experience in the early exploration and development of the emerging SONiC-DASH networking technology, we have created this blog post series to highlight the key aspects of building a DASH-based solution. Part 3 of the series describes API testing of DASH on a DPU platform.

Ensuring the quality of a DASH-based solution

SONiC-DASH, an emerging open networking technology introduced by Microsoft and developed as a spin-off of the SONiC project [1], lays the foundation for new solutions based on programmable hardware such as DPUs or SmartNICs to meet essential enterprise needs. The DASH control-plane software inherits SONiC’s architecture and key components, which significantly streamlines adoption and provides greater flexibility, scalability, and other benefits.

In Part 2 of our SONiC-DASH series, we discussed the four key steps required to integrate DASH with a new DPU, based on PLVision’s hands-on experience developing this type of solution. In this post, we dive into the particulars of DASH testing to help you make sure your DASH-enabled product meets your quality expectations.

While DASH is a growing open project with many contributors, its community testing tools are still in the early stages of development. For example, DASH introduced a set of new overlay APIs at the SAI level with no functional or non-functional test coverage available. Because testing these APIs required significant effort, we reconsidered our approach and looked for efficient methods and frameworks. In this post, we therefore also explore SAI Challenger, a solution for testing the DASH API that was initially developed by PLVision, contributed to the Open Compute Project (OCP), and adopted as a community testing framework in the DASH project.

The complexity of SONiC-DASH testing: beyond traditional methodologies

Traditionally, in the SONiC domain, the sonic-mgmt test infrastructure and the SAI-PTF test framework are the tools of choice for quality assurance. While these established SONiC testing tools can provide test coverage for a DASH-based solution, they do not cover its complete testing scope: the DASH project introduced new APIs and use cases beyond regular switching and routing. The testing approach therefore needed rethinking to address the increased complexity.

DASH’s documentation [2] contains comprehensive information on use cases, reference test bed structure, DASH API definitions, scaling and performance requirements, configuration examples, and references to recommended test frameworks.

sonic-mgmt is a good option for system-level or end-to-end feature testing and offers the broadest coverage. However, it requires the product to run the entire SONiC-DASH control-plane infrastructure, which is available only at the later stages of DASH integration. At the early stages, when only the libsai implementation is available, the SAI-PTF framework is the common testing approach.

Both frameworks use PTF, a scapy-based software traffic generator whose major limitation is that it cannot meet DASH’s scaling and performance requirements. Moreover, neither framework provides coverage for the new DASH APIs and scenarios. The most important gaps in the available test tools are:

- Coverage for DASH-specific scenarios (overlay APIs, new VNET use cases, etc.)

- Support for DASH-specific setups/topologies (number of ports, neighbor types, and configuration)

- Availability of scaling/performance testing

- Capability to harness target platforms at different API levels (SAI, gNMI, custom APIs, etc.)
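The performance gap is easiest to see in numbers. The sketch below computes the frame rate needed to saturate an Ethernet link, counting the 8-byte preamble/SFD and the 12-byte minimum inter-frame gap that every frame also occupies on the wire. The 100 Gb/s link speed and the frame sizes are illustrative assumptions, not DASH requirements, and packet rate is only one dimension: DASH requirements are also expressed in connections per second (CPS) and in rule and mapping counts.

```python
# Rough estimate of the frame rate needed to saturate an Ethernet link.
# On the wire, every frame also occupies 8 bytes of preamble/SFD and a
# 12-byte minimum inter-frame gap: 20 bytes of overhead per frame.
WIRE_OVERHEAD_BYTES = 20
MIN_FRAME_BYTES = 64


def line_rate_pps(link_gbps: float, frame_bytes: int) -> float:
    """Return the maximum frames per second for a link of `link_gbps` Gb/s."""
    if frame_bytes < MIN_FRAME_BYTES:
        raise ValueError(f"Ethernet frames are at least {MIN_FRAME_BYTES} bytes")
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


if __name__ == "__main__":
    # Illustrative 100 Gb/s link; frame sizes include the 4-byte FCS.
    for size in (64, 128, 512, 1518):
        print(f"100G, {size:>4}-byte frames: {line_rate_pps(100, size) / 1e6:7.2f} Mpps")
```

At the minimum frame size, a single 100 Gb/s port needs roughly 148.8 million packets per second, far beyond what a Python, scapy-based generator is generally built for.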

To close these gaps, the DASH community is developing new test scenarios with a particular focus on scaling and performance, since performance at scale is one of the reasons to include DASH in data-center (DC) infrastructure. This effort led to a new, flexible solution based on SAI Challenger, developed in collaboration between PLVision and Keysight Technologies and adopted by the DASH community.

Testing a DASH-based solution: an incremental approach

The DASH design documentation contains abstract definitions of a test automation framework [3] and the test framework maturity stages [4]. Their main goal is to support quality assurance for an evolving DASH-based product. Following these guidelines, we focus on testing one use case, VNET-to-VNET, across the DASH integration stages defined in the previous post of our series. This use case is a good proof of the approach because it relies on new SONiC-DASH features and on both the underlay and overlay SAI APIs.
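Whatever the stage, a VNET-to-VNET test needs an oracle that predicts what should leave the DPU. The sketch below is a deliberately simplified model of the outbound direction, under our own assumptions: it looks up the ENI by source MAC, picks the longest-prefix-match route for the destination customer address (CA), resolves the CA-to-PA mapping in the destination VNET, and returns the expected VXLAN encapsulation fields. All class and field names are hypothetical, and real DASH pipelines include further stages (ACLs, connection tracking, metering) that are omitted here.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Mapping:
    """Hypothetical CA-to-PA mapping result for one destination VM."""
    underlay_dip: str   # PA of the physical host running the destination VM
    overlay_dmac: str   # MAC address of the destination VM


@dataclass(frozen=True)
class ExpectedEncap:
    """Fields a test would check on the VXLAN-encapsulated output packet."""
    outer_dip: str
    vni: int
    inner_dmac: str


class VnetToVnetModel:
    """Simplified outbound VNET-to-VNET lookup used as a test oracle."""

    def __init__(self,
                 eni_macs: Set[str],
                 vnet_vnis: Dict[str, int],
                 routes: Dict[str, List[Tuple[str, str]]],
                 mappings: Dict[Tuple[str, str], Mapping]):
        self.eni_macs = {mac.lower() for mac in eni_macs}
        self.vnet_vnis = vnet_vnis
        # Per-ENI route table: (CA prefix, destination VNET name).
        self.routes = {
            mac.lower(): [(ipaddress.ip_network(prefix), vnet) for prefix, vnet in table]
            for mac, table in routes.items()
        }
        # Keyed by (destination VNET, destination CA).
        self.mappings = mappings

    def expected(self, src_mac: str, dst_ca: str) -> Optional[ExpectedEncap]:
        """Return the expected encapsulation, or None if the packet should drop."""
        src_mac = src_mac.lower()
        if src_mac not in self.eni_macs:
            return None  # no ENI for this source MAC
        addr = ipaddress.ip_address(dst_ca)
        matches = [(net, vnet) for net, vnet in self.routes.get(src_mac, []) if addr in net]
        if not matches:
            return None  # no outbound route
        _, dst_vnet = max(matches, key=lambda match: match[0].prefixlen)  # longest prefix wins
        mapping = self.mappings.get((dst_vnet, str(addr)))
        if mapping is None:
            return None  # CA not mapped in the destination VNET
        # Assumption of this sketch: encapsulate with the destination VNET's VNI.
        return ExpectedEncap(mapping.underlay_dip, self.vnet_vnis[dst_vnet], mapping.overlay_dmac)


if __name__ == "__main__":
    model = VnetToVnetModel(
        eni_macs={"00:aa:00:00:00:01"},
        vnet_vnis={"vnet2": 2000, "vnet3": 3000},
        routes={"00:aa:00:00:00:01": [("10.2.0.0/16", "vnet3"), ("10.2.1.0/24", "vnet2")]},
        mappings={("vnet2", "10.2.1.5"): Mapping("192.168.2.10", "00:bb:00:00:00:05")},
    )
    # ExpectedEncap(outer_dip='192.168.2.10', vni=2000, inner_dmac='00:bb:00:00:00:05')
    print(model.expected("00:aa:00:00:00:01", "10.2.1.5"))
```

Because the oracle is independent of how the DPU is configured, the same expected results can be reused at every integration stage.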

The first stage is quite challenging, since SAI is not in place yet, but we still need to validate the DASH scenario on the DPU. This illustrates the gap mentioned above: the test tool must be API-agnostic and allow the use of a “custom API,” in our case a vendor SDK for the DPU. No out-of-the-box solution is available at this stage, but the community now offers an effective tool, SAI Challenger [5], which allows vendors to define a custom driver for their SDK and validate a scenario at a very early stage.

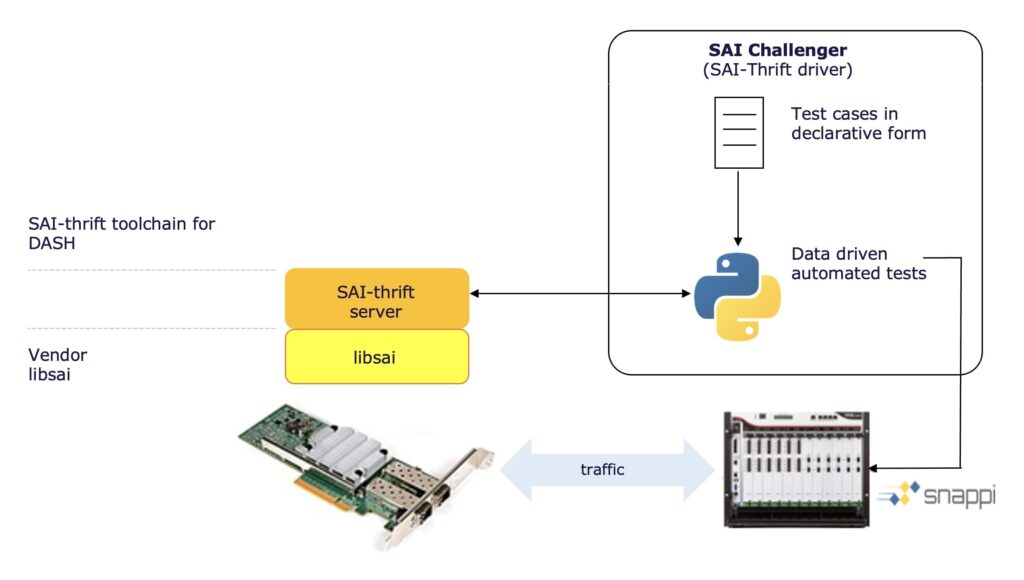
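The idea of an API-agnostic test can be sketched as a thin driver interface: the test body only calls `create`, `get`, and `remove`, and a per-target driver translates those calls into a vendor SDK, SAI, or another API. This is a minimal illustration, not SAI Challenger's actual driver interface; `DeviceDriver`, `FakeVendorSdkDriver`, and `check_vnet_lifecycle` are hypothetical names, and the fake driver keeps objects in memory where a real one would call the vendor SDK.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict


class DeviceDriver(ABC):
    """Hypothetical API-agnostic interface that test cases are written against."""

    @abstractmethod
    def create(self, obj_type: str, attrs: Dict[str, Any]) -> str:
        """Create an object and return its identifier."""

    @abstractmethod
    def get(self, oid: str) -> Dict[str, Any]:
        """Return the stored attributes of an object."""

    @abstractmethod
    def remove(self, oid: str) -> None:
        """Delete an object."""


class FakeVendorSdkDriver(DeviceDriver):
    """Placeholder for a vendor DPU SDK binding; keeps objects in memory."""

    def __init__(self) -> None:
        self._objects: Dict[str, Dict[str, Any]] = {}
        self._next_id = 1

    def create(self, obj_type: str, attrs: Dict[str, Any]) -> str:
        oid = f"oid:{self._next_id:#x}"  # e.g. "oid:0x1"
        self._next_id += 1
        self._objects[oid] = {"type": obj_type, **attrs}
        return oid

    def get(self, oid: str) -> Dict[str, Any]:
        return dict(self._objects[oid])  # copy, so tests cannot mutate driver state

    def remove(self, oid: str) -> None:
        del self._objects[oid]


def check_vnet_lifecycle(dut: DeviceDriver) -> None:
    """Driver-agnostic test: create a VNET, read it back, then remove it."""
    oid = dut.create("VNET", {"vni": 1000})
    assert dut.get(oid)["vni"] == 1000
    dut.remove(oid)
```

Moving to the next stage then means writing a new driver (for example, one backed by SAI-Thrift) while `check_vnet_lifecycle` stays unchanged.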

The second stage of DASH testing corresponds to the integration stage of SAI library development. At this stage, the hardware interface (SAI) and the fundamental DPU capabilities are already available, which enables scaling and performance testing in addition to the existing functional tests.

A hardware traffic generator is a must for this type of testing. Here, snappi [6] has significantly advanced DASH testing: it is an auto-generated Python SDK, designed by Keysight, for the vendor-agnostic, open, model-driven Open Traffic Generator (OTG) API, and it enables development teams to use hardware traffic generators such as IxNetwork. snappi can be executed against any traffic generator that conforms to the OTG API (currently, the best-supported ones are Ixia-C and IxNetwork). It allows testing performance and scale against DASH requirements, such as connections per second (CPS) and the number of rules and mappings, and opens the way to testing DASH on hardware.
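Scale tests need large, well-formed configurations rather than a handful of hand-written entries. The sketch below generates a requested number of CA-to-PA mapping records for one VNET, spreading the customer addresses over a small pool of physical hosts, and computes CPS from a measured run. The record layout, function names, and example numbers are hypothetical; in a real setup, the records would be pushed to the DPU through the test framework and the traffic driven through snappi, neither of which is reproduced here.

```python
import ipaddress
from itertools import islice
from typing import Dict, List


def generate_mappings(vnet: str, ca_prefix: str, pa_hosts: List[str],
                      count: int) -> List[Dict[str, str]]:
    """Return `count` hypothetical CA->PA mapping records for one VNET.

    Customer addresses (CAs) are taken in order from `ca_prefix`; each CA is
    assigned round-robin to one of the physical hosts in `pa_hosts`.
    """
    if not pa_hosts:
        raise ValueError("need at least one PA host")
    cas = list(islice(ipaddress.ip_network(ca_prefix).hosts(), count))
    if len(cas) < count:
        raise ValueError(f"{ca_prefix} has fewer than {count} usable addresses")
    return [
        {"vnet": vnet, "ca": str(ca), "pa": pa_hosts[i % len(pa_hosts)]}
        for i, ca in enumerate(cas)
    ]


def connections_per_second(established: int, duration_s: float) -> float:
    """CPS over a measurement window: established connections / seconds."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return established / duration_s


if __name__ == "__main__":
    records = generate_mappings("vnet2", "10.2.0.0/16", ["192.168.2.10", "192.168.2.11"], 10_000)
    print(len(records), records[0], records[-1])
```

Generating the configuration from a few parameters makes it easy to sweep the mapping count upward until the DPU's limits are reached.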

In partnership with Keysight Technologies, PLVision added snappi support to SAI Challenger, allowing users to execute the same test case in environments ranging from software simulation to hardware-based, high-speed, line-rate traffic generators. The tests use SAI-Thrift, which is also integrated with SAI Challenger, as the API for device configuration.
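Running one test case from software simulation up to line-rate hardware can be sketched as a test written against a small traffic-generator interface, with the environment chosen at run time. `TrafficGenerator`, the two fake generators, and `run_vnet_to_vnet_flow` are hypothetical, and the fakes simply report lossless delivery; in practice, the hardware side would be an OTG-conformant generator driven through snappi, whose API is not reproduced here.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


@dataclass(frozen=True)
class FlowResult:
    tx_packets: int
    rx_packets: int

    @property
    def loss(self) -> int:
        return self.tx_packets - self.rx_packets


class TrafficGenerator(Protocol):
    """Hypothetical interface shared by all traffic generators in the sketch."""
    max_packets: int  # how many packets this environment can reasonably send

    def run_flow(self, flow_name: str, packets: int) -> FlowResult: ...


class FakeSoftwareGenerator:
    """Stands in for a software generator used against a simulated DPU."""
    max_packets = 1_000

    def run_flow(self, flow_name: str, packets: int) -> FlowResult:
        return FlowResult(tx_packets=packets, rx_packets=packets)


class FakeHardwareGenerator:
    """Stands in for a line-rate hardware generator used against a real DPU."""
    max_packets = 100_000_000

    def run_flow(self, flow_name: str, packets: int) -> FlowResult:
        return FlowResult(tx_packets=packets, rx_packets=packets)


ENVIRONMENTS: Dict[str, TrafficGenerator] = {
    "software": FakeSoftwareGenerator(),
    "hardware": FakeHardwareGenerator(),
}


def run_vnet_to_vnet_flow(tg: TrafficGenerator, requested_packets: int) -> FlowResult:
    """Same test body for every environment; only the packet budget adapts."""
    packets = min(requested_packets, tg.max_packets)
    result = tg.run_flow("vnet1-to-vnet2", packets)
    assert result.loss == 0, f"lost {result.loss} packets"
    return result


if __name__ == "__main__":
    for env, tg in ENVIRONMENTS.items():
        print(env, run_vnet_to_vnet_flow(tg, 10_000_000))
```

Capping the packet budget per environment keeps the test logic identical while letting the software run finish quickly and the hardware run exercise real scale.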

Figure 1. Stage #2: Device configuration using the Thrift server.

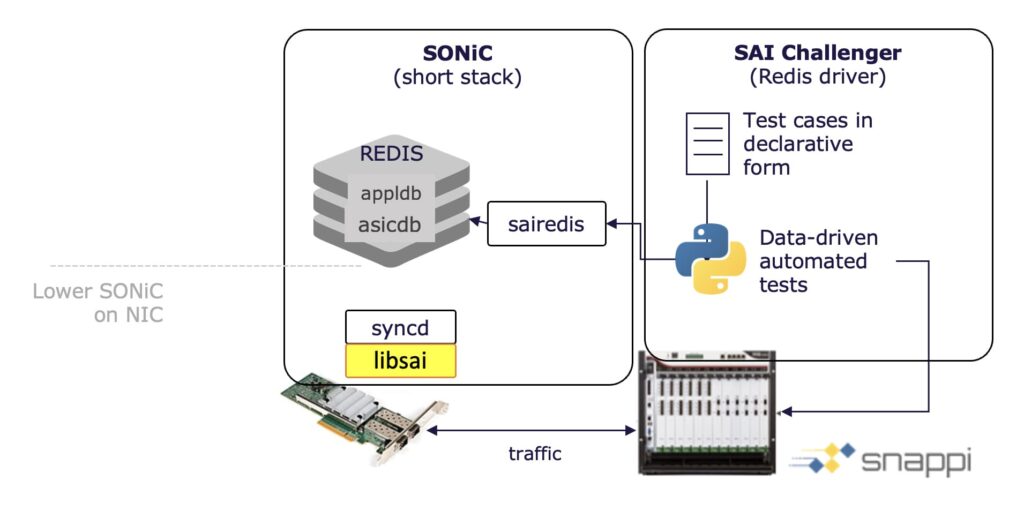

The third testing stage covers integration with syncd and Redis DB, which gives us the so-called “short stack”: libsai wrapped by syncd, with the SAI state represented in Redis DB. At this stage, we can again use SAI Challenger, this time to work with Redis DB records and emulate control-plane operations (i.e., model protocols and user behavior). Both software and hardware traffic generators can be used, so you can go beyond functional tests to measure performance and stress test the DPU. Complex topologies spanning multiple DPUs allow us to model portions of a network and test advanced scenarios.
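To picture what working with Redis DB records involves, the sketch below emulates a control-plane operation by writing a SAI object as a Redis hash whose key follows the `ASIC_STATE:<object type>:<object id>` layout that sairedis uses in ASIC_DB. It is a simplification under stated assumptions: an in-memory dict replaces Redis, the attribute name is illustrative, and in the real short stack sairedis hands changes to syncd through its own channel rather than through the direct writes shown here. `FakeRedis` and `emulate_vnet_create` are hypothetical; the `hset(name, mapping=...)` and `hgetall(name)` calls mirror the redis-py methods of the same names (which return bytes unless the client is created with `decode_responses=True`).

```python
from typing import Dict, List, Mapping, Union

Value = Union[str, int]


class FakeRedis:
    """In-memory stand-in for the few Redis hash operations used below."""

    def __init__(self) -> None:
        self._hashes: Dict[str, Dict[str, str]] = {}

    def hset(self, name: str, mapping: Mapping[str, Value]) -> int:
        """Set hash fields; returns how many fields were newly added (like HSET)."""
        fields = self._hashes.setdefault(name, {})
        added = sum(1 for key in mapping if key not in fields)
        fields.update({key: str(value) for key, value in mapping.items()})
        return added

    def hgetall(self, name: str) -> Dict[str, str]:
        return dict(self._hashes.get(name, {}))

    def delete(self, name: str) -> int:
        return 1 if self._hashes.pop(name, None) is not None else 0

    def keys_with_prefix(self, prefix: str) -> List[str]:
        return sorted(key for key in self._hashes if key.startswith(prefix))


def asic_state_key(obj_type: str, oid: str) -> str:
    """Build a key in the ASIC_STATE:<object type>:<object id> layout."""
    return f"ASIC_STATE:{obj_type}:{oid}"


def emulate_vnet_create(db: FakeRedis, oid: str, vni: int) -> str:
    """Emulate a control-plane 'create VNET' by writing its record (illustrative)."""
    key = asic_state_key("SAI_OBJECT_TYPE_VNET", oid)
    db.hset(key, mapping={"SAI_VNET_ATTR_VNI": vni})
    return key
```

Reading the same records back after a run is a cheap way to check that the state the test intended is the state the stack holds.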

Figure 2. Stage #3: Device configuration using Redis DB.

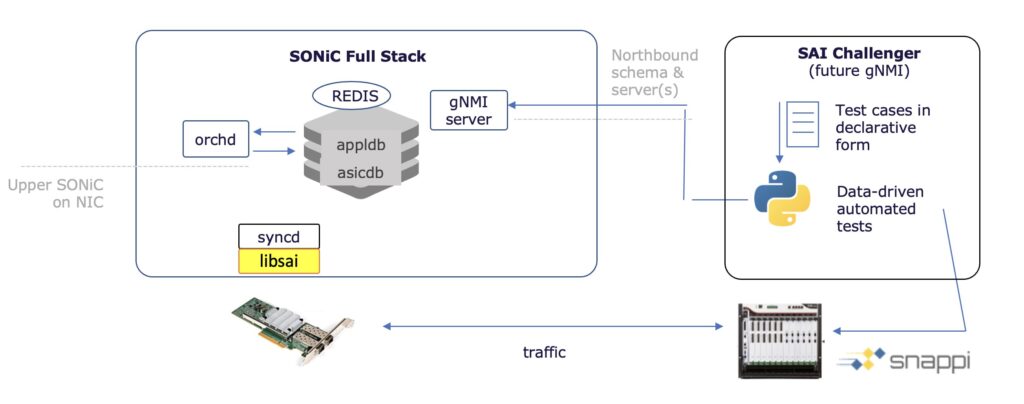

Finally, by the fourth stage, SWSS and all other components, along with gNMI/gRPC, are integrated, so it is a good time to return to the sonic-mgmt framework and adapt it for DASH. First, it must be extended with DASH-specific topologies that resemble the network environment your DPU operates in. At this stage, we test the NOS at the system level and establish end-to-end (E2E) coverage. The good news is that the DASH VNET-to-VNET scenario for sonic-mgmt is already in review, so we expect more real-world SONiC-DASH scenarios to become available soon.
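Extending sonic-mgmt with DASH-specific topologies means describing the DPU, its ports, and its neighbors in a form that can be validated before a run. sonic-mgmt has its own topology definition files; the sketch below does not reproduce that format and only illustrates the kind of checks such a description enables. `Link`, `DashTopology`, and the neighbor-type labels are hypothetical.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List


@dataclass(frozen=True)
class Link:
    dut_port: str       # DPU port name
    neighbor: str       # device on the other end
    neighbor_type: str  # hypothetical role label, e.g. "switch" or "tgen"


@dataclass
class DashTopology:
    name: str
    dut: str
    links: List[Link] = field(default_factory=list)

    def validate(self, allowed_neighbor_types: FrozenSet[str], min_ports: int) -> List[str]:
        """Return a list of problems; an empty list means the topology is usable."""
        problems = []
        ports = [link.dut_port for link in self.links]
        unique_ports = set(ports)
        if len(unique_ports) != len(ports):
            problems.append("a DUT port is used by more than one link")
        if len(unique_ports) < min_ports:
            problems.append(f"needs at least {min_ports} DUT ports, has {len(unique_ports)}")
        for link in self.links:
            if link.neighbor_type not in allowed_neighbor_types:
                problems.append(f"{link.neighbor}: unsupported neighbor type {link.neighbor_type!r}")
        return problems


if __name__ == "__main__":
    topo = DashTopology("dash-vnet2vnet", "dpu1", [
        Link("Ethernet0", "tgen1", "tgen"),
        Link("Ethernet4", "tgen1", "tgen"),
    ])
    print(topo.validate(frozenset({"tgen", "switch"}), min_ports=2) or "topology OK")
```

Catching a miswired or undersized test bed before the run avoids misreading setup errors as DPU failures.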

Figure 3. Stage #4: Device configuration using gNMI (or another API).

Overall, DASH offers plenty of testing tools and approaches. Importantly, these tools support incremental DASH enablement on a new DPU platform: test types and coverage can grow with each DASH integration step.
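The incremental approach can be summarized as data: each integration stage brings a new configuration interface and unlocks additional test types. The sketch below encodes the four stages as described in this post and assumes, for illustration, that suites written at earlier stages keep running at later ones, so available coverage accumulates. `Stage`, `StagePlan`, `PLANS`, and `available_test_types` are hypothetical names.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, FrozenSet, Tuple


class Stage(IntEnum):
    VENDOR_SDK = 1   # no SAI yet: custom driver over the vendor SDK
    SAI = 2          # libsai available
    SHORT_STACK = 3  # libsai wrapped by syncd, state in Redis DB
    FULL_STACK = 4   # SWSS, gNMI/gRPC, and all components integrated


@dataclass(frozen=True)
class StagePlan:
    config_interface: str
    tools: Tuple[str, ...]
    new_test_types: FrozenSet[str]


PLANS: Dict[Stage, StagePlan] = {
    Stage.VENDOR_SDK: StagePlan("vendor SDK", ("SAI Challenger",),
                                frozenset({"functional"})),
    Stage.SAI: StagePlan("SAI-Thrift", ("SAI Challenger", "snappi"),
                         frozenset({"scale", "performance"})),
    Stage.SHORT_STACK: StagePlan("Redis DB", ("SAI Challenger", "snappi"),
                                 frozenset({"stress", "multi-DPU"})),
    Stage.FULL_STACK: StagePlan("gNMI", ("sonic-mgmt",),
                                frozenset({"system", "end-to-end"})),
}


def available_test_types(stage: Stage) -> FrozenSet[str]:
    """Union of test types unlocked up to and including `stage`."""
    types: FrozenSet[str] = frozenset()
    for earlier in Stage:
        if earlier <= stage:
            types |= PLANS[earlier].new_test_types
    return types
```

A test runner could consult such a table to skip suites whose configuration interface is not yet available on the platform.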

Conclusions

Even though the DASH control-plane software reuses the established SONiC architecture, tooling, and environment, its testing can be challenging and is not fully covered by existing methodologies. It involves complex topologies and testing at different API levels, across software stacks, and on hardware platforms. Overall, working with DASH demands strong expertise in network operating systems and a system-level understanding of data-center networking.

PLVision’s experience with disaggregated networking software systems (SONiC, SAI, DentOS) enabled us to explore DASH as soon as it emerged and to start developing DASH-based solutions. Through our partnership with Keysight, we’ve also helped speed up DASH testing by extending SAI Challenger, which PLVision developed and contributed to the Open Compute Project (OCP).

The goal of SONiC-DASH is to significantly extend SONiC functionality, including support for stateful workloads, while reducing energy consumption and simplifying network management. Although the project is still in its early stages, its trajectory is clear: DASH is being actively developed by the community and provides an innovative way to increase the performance of cloud services with DPUs and SmartNICs.