In this article, we share ready-to-use, practical, and powerful open-source tools that can be used with both emulated and real equipment to start Linux networking test automation. We have extended the Mininet-based FRR test framework, written in Python, so that it can also test physical equipment running a Linux-based OS, such as the recently released switchdev-based DENT OS or the popular SONiC. As a bonus, we provide examples of how to send and check network traffic in this environment.

Introduction: Testing Linux Networking

FRRouting (FRR) is a routing protocol suite for Linux environments. Rooted in the Quagga (Zebra) open-source implementation of the most crucial L3 protocols, FRR has become the stack of choice for enabling layer 3 connectivity for data center workloads. It is widely used for virtualized (server-side) networking, connecting hosts, virtual machines, and containers to a data center network. Typical uses include building L3 overlay networks, advertising network service endpoints, and running Internet access/peering routers.

Along with its strong footprint in server-side networking, FRR has become a common choice of L3 protocol stack for physical routers over the last decade. It is used by both proprietary and open-source NOSes and contains implementations of BGP, OSPF, EVPN, and many other routing protocols. Although the list of these open-source NOSes is quite long, the most prominent FRR adopters include SONiC, OPX (OpenSwitch), and DENT OS.

Testing an open-source network operating system (NOS) usually translates into testing something on Linux and, preferably, in a quick and easy way. To avoid spending excess time setting up frameworks, it is best to use open-source tools. Also, since physical testbeds are expensive and target boards are limited in number, you might consider saving precious resources by running test scripts in an emulated environment before running them without change on a physical device. Things can go bad quickly when one of the requirements is to set up a large topology of multiple hosts – you may lack enough boards to build such a setup.

The general idea behind our testing solution was to avoid reinventing the wheel. Many existing frameworks look like they have everything you need, “except for…” Have you heard this? “Except for” aspects can easily become an additional project requiring a separate budget.

Testing networking features usually requires a network topology and the ability to send control and/or data traffic. Fortunately, several open-source tools can help with this. We chose FRRouting Topotest because of its simplicity.

Topotests was developed by the community to test FRR. It is well suited for building virtual topologies and validating CLI and control traffic. Topotests combines two well-established open-source tools: Pytest and Mininet. Pytest is a mature and widely used Python test framework, and Mininet is a popular tool for emulating network topologies.

But what about real equipment? This is why we created our solution. When switching from emulation to testing on real equipment, an engineer doesn’t need to change anything in the tests or the test framework. Moreover, we can take a fully virtual topology with multiple hosts and replace one of the emulated devices with a real one. This approach, by the way, is similar to what the SONiC community testbed can do. Compared to SONiC’s testbed, however, Topotests is much more lightweight, has fewer resource requirements, is easier to maintain, and can run on a VM and on various Linux distributions.


It is worth mentioning that Python is the preferred language for the FRR test suite. So, if your test development language is Python, then you are definitely safe.

How to begin using our solution

First, you need to download the original FRRouting code. You can use the official GitHub repository or our fork. We recommend using our fork so it will be easier for you to get the modified code later on.

Next, we will first run a “vanilla” test and then add the missing parts needed to run the suite against a physical switch. All FRR tests expect FRR to be installed on the test server. This installation is used to run the services inside the virtual router instances.

Follow these steps to install FRR and test dependencies:

- Since our future changes are based on stable version 7.5, start with “git checkout frr-7.5”.

- Next, install FRR by following the guide “Building FRR for Ubuntu 18.04” until you reach the MPLS section. From MPLS down, all further sections are optional. You can find manuals for other Linux distros in the FRR documentation, but we recommend starting with Ubuntu 18.04.

- Install the test dependencies by following “Topotests installation and setup” (note that this guide is written for Ubuntu 18.04 and Python 2). You only need to follow the Mininet infrastructure installation guidelines; the rest is optional.

Yes, so far it’s Python 2. The community has been migrating the code to Python 3, but the docs are not updated yet, so for the sake of simplicity we will keep it that way for now. If you are ready to translate the steps to Python 3 while debugging issues along the way, go ahead! :) We have one helpful hint for you: use Pytest version 4.6.11 and install Mininet from PyPI, not from the Ubuntu repository.

Now we are ready to launch a test on the fully virtual topology for which it is designed. We should do this prior to any modifications in the original code to ensure we have fulfilled all dependencies and the test suite is fully functional.

cd frr/tests/topotests/bgp_reject_as_sets

sudo python test_bgp_reject_as_sets.py -k test_bgp_reject_as_sets

The test should pass.

How to add physical equipment

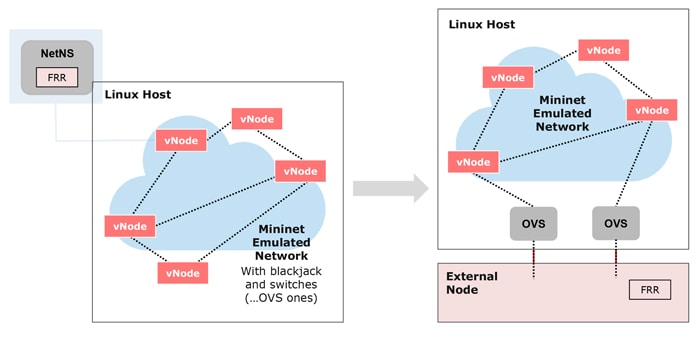

Let’s replace one router in the virtual topology with a physical device. The scheme below demonstrates how our setup will change.

A single Linux server is sufficient to execute the existing FRRouting Topotest suite. It spins off multiple FRR instances enclosed in Linux namespaces, then connects them in a test-specific topology using Mininet’s capabilities. The last step is to run packets through the virtual network to verify the proper behavior of the routing protocol stack.

Now, our goal is to attach several test server ports to the Mininet topology and then cable the DUT ports to the server’s NIC. This way, we extend the virtual test topology to include a physical router.

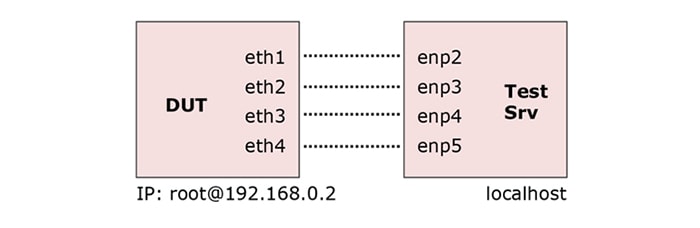

The following port names and addresses are used in config examples:

We can also use VM as a DUT. In fact, it doesn’t matter what you use – a Linux VM is as good as a physical device. All you need is SSH access and proper Linux network interfaces on the test server connected to DUT.

Our solution can be applied “as is” for testing a device running FRR as a native Linux service without any additional abstractions. For testing routing on DENT OS (or any other flavor of ONL), you won’t need to make any changes: it is a ready-to-go solution. For SONiC, you will need to adapt it, since FRR runs in a Docker container and all commands have to be executed inside the BGP container. If you need help, there are many examples on the Internet of how to use Mininet with Docker containers.

When it comes to links, the number needed is dictated by the test you are going to run. In most cases, FRR tests require no more than 3 links. However, you should check each test in detail since each one uses its own virtual topology and may use 4 or more links.

Now, to make this topology functional, let’s look at a branch with hardware router support. This is where the magic happens – spanning the Mininet topology outside the test server.

git checkout with_hw_router_2

Note: the checkout is possible if you made the clone from PLVision’s repository. Otherwise, you will need to add an additional remote as the changes are not in the upstream yet.

To summarize, the code in the branch adds three aspects to the existing code:

1. Support for a remote node (the RemoteRouterMixin class). By default, Mininet creates Linux network namespaces to emulate network nodes and, to execute commands inside these namespaces, uses the standard CLI, “ip netns exec.” The patch adds a new class of nodes that uses SSH to run commands instead.

2. The ability to connect external network interfaces (NIC ports or a VM’s tap interfaces) to Mininet’s internal OVS switches (the RemoteLink class). Compared to connections between network namespaces, there is no need to create and delete a veth pair to link a node to the topology. One end of the link is already inside the node (no need to set the destination network namespace). The other end of the link needs to be added to OVS (this part is unchanged).

3. Also, the patch contains small amendments to the topogen.py module, allowing the user to select which router needs to be replaced and how to copy configuration files to the remote node (scp instead of the regular cp).
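The first item can be pictured with a small sketch. The helper below is hypothetical and is not the code from the branch; it only shows how a command meant for a node could be wrapped in an `ssh` invocation instead of being executed in a local namespace. The function name and its parameters are our assumptions, chosen to mirror the setup options used in this article.

```python
def build_ssh_command(command, server, user="root", port=22, key_file=None):
    """Return the argv list that runs `command` on a remote node over SSH.

    Hypothetical helper for illustration only; the RemoteRouterMixin
    class in the branch may be implemented differently.
    """
    argv = ["ssh", "-p", str(port)]
    if key_file:
        # Use key-based authentication so no password prompt appears.
        argv += ["-i", key_file]
    argv.append("{}@{}".format(user, server))
    # ssh hands this string to the remote shell, which parses it there.
    argv.append(command)
    return argv


if __name__ == "__main__":
    print(build_ssh_command("ip link show", "192.168.0.2",
                            key_file="/root/.ssh/id_rsa"))
```

The resulting list can be passed to `subprocess.check_output()` on the test server, which is one possible way to get the command output back into the test.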

An additional setup description (the links and the IP of the DUT) should be added to tests/topotests/pytest.ini. Append your setup info to the file.

Example:

r_router = r1

r_server = 192.168.0.2

r_user = root

r_keyFile = /root/.ssh/id_rsa

r_sshPort = 22

r_links_num = 4

r_link_0 = enp2,eth1

r_link_1 = enp3,eth2

r_link_2 = enp4,eth3

r_link_3 = enp5,eth4

Please note the ‘r_router’ option here. It should match the name of the router in the topology that is to be replaced with an external device. Since different tests may use different router names in their topologies, it can be overridden with the CLI option --hw-router=NAME. Usually, the topology definition is the first class inherited from Topo in each test file.
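To make the layout of these options more tangible, here is a minimal Python 3 sketch that reads the link definitions back from such a file. It is not how the branch parses its settings; the section name `[topogen]`, the function name, and the shortened sample are assumptions made for illustration.

```python
import configparser

# Shortened sample; the section name is an assumption for this sketch.
SAMPLE_INI = """
[topogen]
r_router = r1
r_server = 192.168.0.2
r_links_num = 2
r_link_0 = enp2,eth1
r_link_1 = enp3,eth2
"""


def read_remote_links(ini_text, section="topogen"):
    """Return the r_link_N entries as a list of (first, second) name pairs."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    options = parser[section]
    links = []
    # r_links_num tells us how many r_link_N options to expect.
    for index in range(options.getint("r_links_num")):
        first, second = options["r_link_{}".format(index)].split(",")
        links.append((first.strip(), second.strip()))
    return links


if __name__ == "__main__":
    print(read_remote_links(SAMPLE_INI))
```

A check like this can be handy before a long test run, for example to confirm that the number of configured links is enough for the test you are about to start.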

Now that you have your device, it’s time to run the test. This one requires two links.

cd frr/tests/topotests/bgp_reject_as_sets

sudo python test_bgp_reject_as_sets.py -k test_bgp_reject_as_sets --hw-router=r2

Also, try “bgp-ecmp-topo1” with the “r1” router replaced. This is a good example of a test, as it contains a picture of the topology in the test folder. Note that this test requires four links. By the way, the frr-7.5 branch version of this test does not support Python 3, while the previous test can be run on both Python 2 and Python 3.

Please note that we are replacing router r2 in the bgp_reject_as_sets run, since the test fails with the default r1. The reason is that the real device has a management IP, which we use for SSH, and this connected network gets propagated by BGP. The test steps do not expect this for r1, but nothing of the kind is verified for r2 :)

If you want to continue running tests in the emulated environment, set a non-existent router name, e.g. --hw-router=asdf. A non-existent value can also be set as the default in pytest.ini.

That’s it! We have the tests and the test framework, allowing you to run testing on both virtual and physical equipment without needing to make any changes.

How to debug your test

Sometimes, things go smoothly and sometimes the unexpected happens. There is a high probability you will need to debug your code, so let’s discuss how.

First, try increasing the verbosity of the test output by adding the “-s -v” options to the test run command. This may be all you need. If it’s not enough, follow the guidelines below.

You can always use a standard Python debugger. Pytest allows you to activate this tool with a single line in the code: pytest.set_trace(). Another option is to troubleshoot the emulated topology using Mininet CLI. To get a command-line prompt, add CLI(net) to your test (refer to the topotests folder to see examples of how this works).

But what if you need to debug the setup itself? Perhaps it’s not built correctly, or there is a link missing between the nodes. In this case, follow these steps:

1. Set log level to debug: setLogLevel('debug') (this call is usually placed at the bottom of test files).

2. Find out the NetNS PIDs. The debug log contains lines like the following:

moving h1-eth0 into namespace for h1

*** h1 : ('ifconfig', 'h1-eth0', 'up')

(s1, h1) *** s1 : ('ip link add name s1-eth0 address ea:76:bf:1e:bd:9b type veth peer name r1-eth0 address ba:cb:92:21:57:8d netns 29003',)

3. Now, let’s connect to netns:

mnexec -a PID COMMAND

mnexec -a 29003 bash

4. From here, do whatever it takes to connect everything: ping, tcpdump, rm -rf / …
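If the log is long, step 2 can be scripted. The sketch below is our own helper, not part of Topotests or Mininet; it assumes the veth creation lines look like the sample shown above and extracts the namespace PID for each peer interface.

```python
import re

# Matches the tail of the "ip link add ... type veth peer name ..." line
# that appears in the debug log sample above.
VETH_RE = re.compile(r"peer name (\S+) address \S+ netns (\d+)")


def netns_pids(debug_log):
    """Map each peer interface name to the PID of its network namespace."""
    return {m.group(1): int(m.group(2)) for m in VETH_RE.finditer(debug_log)}


LOG = (
    "(s1, h1) *** s1 : ('ip link add name s1-eth0 address ea:76:bf:1e:bd:9b "
    "type veth peer name r1-eth0 address ba:cb:92:21:57:8d netns 29003',)"
)

if __name__ == "__main__":
    for iface, pid in netns_pids(LOG).items():
        # Prints the mnexec command to enter the namespace of each node.
        print("{}: mnexec -a {} bash".format(iface, pid))
```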

Also, you might be interested in how OVS instances are interconnected (Open vSwitch is used to bring up those complex virtual topologies). Look at the output of “ovs-vsctl show.” Each peer-to-peer link is using a separate switch. Therefore, it won’t be hard to identify all connections. And do not forget to use the debug log as a reference for all interface, switch, and node names. Happy debugging!

How to run data traffic through the setup

The FRRouting Topotest suite is primarily focused on test scenarios for routing signaling protocols (BGP, OSPF, IS-IS, etc.). When testing the hardware forwarding plane, you also need to make sure that the FIBs are programmed in the hardware and that data traffic traverses the network properly. Let’s look at some simple tools to generate and capture packets.

Mininet already has ping and iperf support out-of-the-box and both tools are usually installed by default in most Linux distributions. This functionality is enough for 99% of cases. Simply call tgen.net.ping() or tgen.net.iperf(). Mininet allows you to easily add the support of your own tools like iperf3 or nc (netcat) so you can have additional options like JSON output. Take a look at the iperf and iperf3 man pages for additional options and more details on how they are different. You can also refer to Mininet manuals for more examples, ping flavors, and how to use them in Python.

On a side note, having a Linux environment in this testing approach is a great advantage, as it allows you to use any user-space applications you like. Iperf and ping are only the tip of the iceberg. For example, you can establish client-server connections by using an HTTP server one-liner with wget or curl as the HTTP client.

If you need to send arbitrary traffic, or the iperf in Mininet doesn’t satisfy your requirements, check out mausezahn, a simple CLI traffic generator. This tool is also used by the Linux kernel selftests and is available in the standard Debian/Ubuntu repositories. In this case, traffic can be checked either by capturing it with tcpdump or by adding software counters with tc.

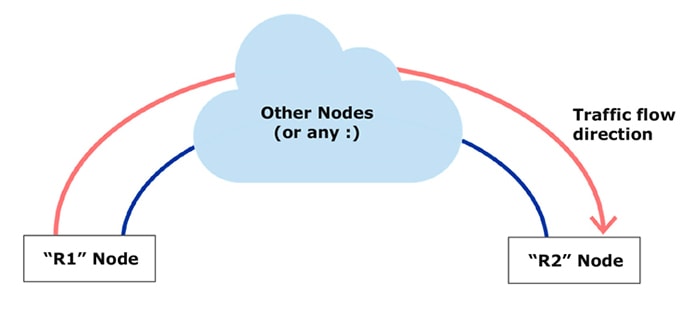

Let’s use the following topology as an example:

R1 Node will play the role of traffic sender and R2 Node will receive and analyze the traffic.

Sending IP traffic with mausezahn looks like this:

iface_name_to_r2 = "r1-eth0"  # In case we use emulated environment. Otherwise it's better to read interface names from tgen.net['r1'].intfList()

mac = "00:11:22:33:44:55"

ip = "10.0.0.1"

tgen.gears['r1'].run("mz {iface} -c 1 -p 64 -b {dst_mac} -B {dst_ip} -t ip -q".format(iface=iface_name_to_r2, dst_mac=mac, dst_ip=ip))

NOTE: By default, source MAC and IP are taken from iface “iface_name_to_r2.”

Check out mausezahn man pages. This tool allows you to send various types of traffic. You can also find usage examples in Linux selftests (link1, link2).
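When several tests send similar packets, it may be convenient to keep the command line in one place. The following is a minimal sketch of such a wrapper; the function name and defaults are our assumptions, and it uses only the mz options already shown in the example above.

```python
def build_mz_command(iface, dst_mac, dst_ip, count=1, size=64):
    """Build the mz command line used above for a simple IP packet burst.

    Hypothetical helper; it only formats the same options as the example:
    -c packet count, -p size, -b destination MAC, -B destination IP.
    """
    return (
        "mz {iface} -c {count} -p {size} -b {dst_mac} -B {dst_ip} -t ip -q"
    ).format(iface=iface, count=count, size=size,
             dst_mac=dst_mac, dst_ip=dst_ip)


if __name__ == "__main__":
    print(build_mz_command("r1-eth0", "00:11:22:33:44:55", "10.0.0.1"))
```

The returned string can be passed to tgen.gears['r1'].run() in the same way as the inline command.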

Now, let’s check the traffic on the receiving side. Counting packets is what you want to do if you only need to see traffic stats and not analyze packet content. Of course, for the sake of precision, you should create a filter to ensure you only count the desired stream. Here is how you can create filters to track the traffic from the example above using tc on R2 Node:

tgen.gears['r2'].run("tc qdisc add dev {iface} ingress".format(iface=iface_name_to_r1))

tgen.gears['r2'].run("tc filter add dev {iface} ingress protocol ip pref 1 handle 101 flower dst_mac {mac} action pass".format(iface=iface_name_to_r1, mac=mac))

And here is how you can check stats after sending the traffic:

stats = tgen.gears['r2'].run('tc -j -s filter show dev {iface} ingress | jq -e ".[] | select(.options.handle == 101) | select(.options.actions[0].stats.packets == 1)" 2> /dev/null'.format(iface=iface_name_to_r1))  # non-empty only if exactly one packet matched

In the example above, we used bash oneliners from the Linux selftests. However, you can change it in any way you want. “tc” has the option to return JSON, and you can parse it directly in Python instead of using the CLI ‘jq’ tool.
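Here is a minimal sketch of the Python-side parsing. The helper name is ours, and the sample JSON is hand-written to follow the fields used in the jq expression above (`options.handle` and `options.actions[0].stats.packets`); it is not captured output, and real tc output contains more fields.

```python
import json


def packets_for_handle(tc_json, handle):
    """Return the packet counter of the first action of the given filter.

    `tc_json` is the text printed by `tc -j -s filter show ...`.
    Returns None when no filter with that handle is present.
    """
    for entry in json.loads(tc_json):
        # Some entries carry no "options" key, so fall back to an empty dict.
        options = entry.get("options", {})
        if options.get("handle") == handle:
            return options["actions"][0]["stats"]["packets"]
    return None


# Hand-written sample shaped after the jq path; not real captured output.
SAMPLE_TC_JSON = json.dumps([
    {"protocol": "ip", "pref": 1, "kind": "flower"},
    {"protocol": "ip", "pref": 1, "kind": "flower",
     "options": {"handle": 101,
                 "actions": [{"stats": {"bytes": 64, "packets": 1}}]}},
])

if __name__ == "__main__":
    print(packets_for_handle(SAMPLE_TC_JSON, 101))
```

In a test, the first argument would be the string returned by tgen.gears['r2'].run() for the tc command without the jq part, and the result can be compared with the number of packets sent.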

The next example with tcpdump is much simpler but less flexible.

Start tcpdump in the background:

tgen.gears['r2'].run('tcpdump -G 5 -W 1 -q -c 1 -i {iface} -w capture.pcap ether dst {mac} &'.format(iface=iface_name_to_r1, mac=mac))

Send traffic and/or do any other stuff in your code, then double check what has been captured:

capture = tgen.gears['r2'].run('tcpdump -r capture.pcap -nve')

For functional testing, this might be more than enough. Still, there are multiple ways to improve it – check out the last section for ideas.

Summary

- You can adapt the existing routing-stack test suite to run against physical equipment (switches/routers), which gives you out-of-the-box test content for the routing stack in SONiC, DENT OS, and other NOSes.

- You can still use the virtualized environment to develop new test cases and troubleshoot the existing ones. A single PC running Linux is sufficient.

- It is possible to perform emulation of complex topologies and put a DUT in different, complex use cases using a single PC – no need to overuse or overbook the usually limited hardware resources.

- A wide range of traffic, test, and debug tools for networking is available out of the box, since these are regular Linux utilities.

What's next?

This approach has plenty of room for improvement but it depends on your need. In our case, we are ready to go into production:) If you are looking for something more, here are a few ideas.

You can add an option to attach multiple physical devices to the test topology, which is very helpful in some cases, since not every test framework supports building and managing a multi-device topology out of the box. With the current implementation, you can do this only by “hardcoding” the real devices in the topology class inside the test case. The provided patch can be extended by converting the single “r_router” setting into a list of routers.

Currently, all nodes run FRR. You are not obligated to configure it, but the test will still look for its binaries. To change that, you can disable the FRR daemons, adjust the mount folders, add your own profile and, finally, add your own node class that fits your specific needs.

If you are looking for the most flexibility and a more Pythonic way of generating traffic, it’s always possible to add a new Node type in Mininet to replace FRR services. You can run TRex or Ostinato inside the new node, or run a Python RPC service that drives pypacker or Scapy. Afterward, you won’t need any “bash” code in your tests, but that’s another story :)

Last but not least, we invite you to investigate the Mininet example folder in the official GitHub repository. It’s full of great ideas for Mininet applications.

And don’t forget, the main idea of Topotests is to build topology and provide access to virtual or real nodes in the network. However, you can be creative in using it for your specific needs so go ahead and get started!