As a follow-up to our post about the traffic steering prototype, I’d like to draw your attention to its key component: DPDK-accelerated NGINX, which functions as an NFV node. In our comparative analysis of DPDK-NGINX and the original NGINX, we found that DPDK-NGINX delivers roughly 2.7 times the throughput (1875.45 vs 705.94 requests per second), even on a virtual machine with a virtual DPDK adapter.

The key element of PLVision’s OpenDaylight-based traffic steering prototype is the DPDK-accelerated NGINX load balancer, which balances HTTP traffic from HTTP clients across a pool of web servers. Below you will find detailed results of performance testing of DPDK-accelerated NGINX compared with non-accelerated NGINX, as well as an overview of the technologies involved.
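Balancing, in this setup, means choosing one web server from the pool for each client request. By default NGINX distributes upstream requests with a weighted round-robin method; the minimal C sketch below shows plain, unweighted round-robin selection over a hypothetical pool. `struct rr_pool`, `rr_pick()` and the backend addresses are illustrative assumptions, not NGINX's internal API or the prototype's actual configuration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical pool of upstream web servers (illustrative, not NGINX internals). */
struct rr_pool {
    const char **backends; /* backend addresses, e.g. "10.0.0.1:80" */
    size_t count;          /* number of backends in the pool */
    size_t next;           /* index of the backend to use for the next request */
};

/* Return the next backend in round-robin order and advance the cursor. */
static inline const char *rr_pick(struct rr_pool *pool)
{
    assert(pool->count > 0);
    const char *chosen = pool->backends[pool->next];
    pool->next = (pool->next + 1) % pool->count;
    return chosen;
}
```

With three backends, successive calls return the first, second and third server and then wrap around to the first. The real NGINX implementation additionally honors per-server weights and skips servers that are temporarily marked as failed.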

DPDK-accelerated NGINX Load Balancer

DPDK-NGINX is a fork of the official NGINX 1.9.5, a free, open-source, high-performance HTTP server and reverse proxy, as well as an IMAP/POP3 proxy server. NGINX is known for its high performance, stability, rich feature set, simple configuration, and low resource consumption, and all NGINX features are available in DPDK-NGINX. For our prototype, we needed DPDK-NGINX to act as a proxy server: each HTTP client request is forwarded to a specific web server from the pool, and the server’s response is passed back to the client. To implement this, we had to introduce some fixes in DPDK-NGINX, in particular in the “dpdk-nginx/src/event/modules/opendp_module.c” file, where we corrected the implementation of the following functions:

Note: We did not submit our changes to the DPDK-NGINX GitHub repository, because we had reported these issues to the author and they were fixed shortly afterwards.

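```c
int getsockopt(int sockfd, int level, int optname, void *optval, socklen_t *optlen)
{
    /* Descriptors above ODP_FD_BASE were created by the DPDK (netdp) stack:
     * strip the offset and forward the call to that stack. */
    if (sockfd > ODP_FD_BASE)
    {
        sockfd -= ODP_FD_BASE;
        return netdpsock_getsockopt(sockfd, level, optname, optval, optlen);
    }
    else
    {
        /* Any other descriptor is a regular kernel socket. */
        return real_getsockopt(sockfd, level, optname, optval, optlen);
    }
}
```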

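```c
int connect(int sockfd, const struct sockaddr *addr, socklen_t addrlen)
{
    ODP_FD_DEBUG("fd(%d) start to connect \n", sockfd);

    /* DPDK (netdp) descriptors carry an ODP_FD_BASE offset: strip it and
     * forward the call to the DPDK stack. */
    if (sockfd > ODP_FD_BASE)
    {
        sockfd -= ODP_FD_BASE;
        return netdpsock_connect(sockfd, addr, addrlen);
    }
    else
    {
        /* Any other descriptor is a regular kernel socket. */
        return real_connect(sockfd, addr, addrlen);
    }
}
```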
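Both wrappers follow one convention: sockets created by the DPDK stack are exposed to NGINX as descriptors offset above ODP_FD_BASE, so a single overridden socket call can route each descriptor to the right stack. The C sketch below isolates that convention. `DEMO_FD_BASE` (its value of 2000 is arbitrary), the `demo_*` helpers and the callback-based router are hypothetical, and we assume the offset is added only to positive stack descriptors, which the strict `>` comparison requires.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for ODP_FD_BASE; the real value is defined by dpdk-nginx. */
#define DEMO_FD_BASE 2000

/* Map a descriptor returned by the user-space stack into the public fd space.
 * Stack fd 0 is rejected: it would map to DEMO_FD_BASE itself, which the
 * strict '>' test in demo_is_stack_fd() treats as a kernel descriptor. */
static inline int demo_public_fd(int stack_fd)
{
    assert(stack_fd > 0);
    return stack_fd + DEMO_FD_BASE;
}

/* Same test as the wrappers above: above the base means user-space stack. */
static inline bool demo_is_stack_fd(int fd)
{
    return fd > DEMO_FD_BASE;
}

/* Strip the offset before calling into the user-space stack. */
static inline int demo_stack_fd(int fd)
{
    return fd - DEMO_FD_BASE;
}

/* Route one single-argument call to the matching backend (hypothetical callbacks). */
static inline int demo_route(int fd, int (*stack_op)(int), int (*kernel_op)(int))
{
    return demo_is_stack_fd(fd) ? stack_op(demo_stack_fd(fd)) : kernel_op(fd);
}
```

The scheme relies on the kernel never returning descriptors above the base: a process with more than DEMO_FD_BASE open kernel descriptors would have the higher ones misrouted to the user-space stack.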

Introducing the Accelerated Network Stack

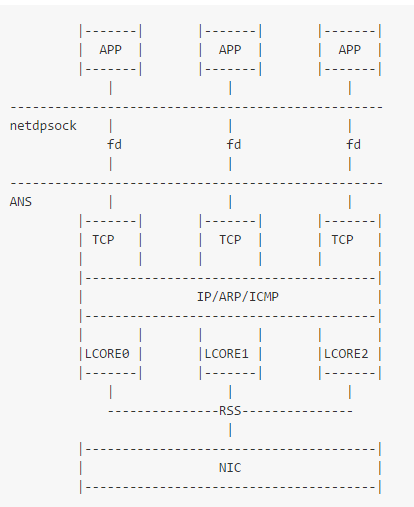

The principal difference between DPDK-NGINX and NGINX is the DPDK-accelerated TCP/IP stack (Accelerated Network Stack, ANS), which is what makes it possible to integrate Intel DPDK with NGINX. The ANS block diagram above uses TCP traffic as an example to illustrate how it works.

Source – https://github.com/ansyun/dpdk-ans

- The NIC distributes packets to different lcores based on RSS, so packets of the same TCP flow are handled by the same lcore.

- Each lcore has its own TCP stack, so no data is shared between lcores and no locking is needed.

- IP/ARP/ICMP are shared between lcores.

- The APP process can run as a TCP server.

- The APP process can run as a TCP client; it can communicate with each lcore, and the TCP connection is placed on a specific lcore automatically.

- If reuseport is enabled, APP processes can bind the same port and accept TCP connections in round-robin fashion.

- If the NIC doesn’t support multiple queues or RSS, ans_main.c should be enhanced to reserve one lcore for receiving and sending packets from the NIC and to distribute packets to the ANS TCP stack lcores by software RSS.

Source: https://github.com/ansyun/dpdk-ans.
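The first and last points describe steering every TCP flow to a single lcore by RSS, either in the NIC or in software when the NIC lacks multi-queue/RSS support. The C sketch below shows what software RSS could look like, assuming the Toeplitz hash used by RSS-capable NICs, the widely published 40-byte key from Microsoft's RSS verification tables, and a 128-entry redirection table filled round robin. `rss_key`, `toeplitz_hash()`, `rss_tuple_v4()`, `RETA_SIZE` and the `reta_*`/`rss_*` helpers are illustrative names, not code from ans_main.c.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 40-byte RSS key published in Microsoft's RSS hash verification tables. */
static const uint8_t rss_key[40] = {
    0x6d, 0x5a, 0x56, 0xda, 0x25, 0x5b, 0x0e, 0xc2,
    0x41, 0x67, 0x25, 0x3d, 0x43, 0xa3, 0x8f, 0xb0,
    0xd0, 0xca, 0x2b, 0xcb, 0xae, 0x7b, 0x30, 0xb4,
    0x77, 0xcb, 0x2d, 0xa3, 0x80, 0x30, 0xf2, 0x0c,
    0x6a, 0x42, 0xb7, 0x3b, 0xbe, 0xac, 0x01, 0xfa,
};

/* Toeplitz hash: for every set input bit, XOR in the 32-bit key window at that bit offset. */
static inline uint32_t toeplitz_hash(const uint8_t *key, size_t key_len,
                                     const uint8_t *data, size_t len)
{
    assert(key_len >= len + 4);
    uint32_t result = 0;
    uint32_t window = ((uint32_t)key[0] << 24) | ((uint32_t)key[1] << 16) |
                      ((uint32_t)key[2] << 8) | (uint32_t)key[3];
    for (size_t i = 0; i < len; i++) {
        for (int bit = 7; bit >= 0; bit--) {
            if (data[i] & (1u << bit))
                result ^= window;
            /* Slide the window one bit along the key. */
            window = (window << 1) | ((key[i + 4] >> bit) & 1u);
        }
    }
    return result;
}

/* Serialize an IPv4/TCP 4-tuple in RSS input order (big-endian):
 * source address, destination address, source port, destination port. */
static inline size_t rss_tuple_v4(uint8_t out[12], uint32_t src_ip, uint32_t dst_ip,
                                  uint16_t src_port, uint16_t dst_port)
{
    const uint32_t words[2] = {src_ip, dst_ip};
    size_t n = 0;
    for (int w = 0; w < 2; w++)
        for (int shift = 24; shift >= 0; shift -= 8)
            out[n++] = (uint8_t)(words[w] >> shift);
    out[n++] = (uint8_t)(src_port >> 8);
    out[n++] = (uint8_t)src_port;
    out[n++] = (uint8_t)(dst_port >> 8);
    out[n++] = (uint8_t)dst_port;
    return n;
}

#define RETA_SIZE 128 /* hypothetical redirection table size */

/* Fill the redirection table round robin across the ANS lcores. */
static inline void reta_init(uint16_t reta[RETA_SIZE], uint16_t n_lcores)
{
    for (int i = 0; i < RETA_SIZE; i++)
        reta[i] = (uint16_t)(i % n_lcores);
}

/* Index (into the list of ANS lcores) that owns a flow: low hash bits pick a table slot. */
static inline uint16_t rss_lcore_index(const uint16_t reta[RETA_SIZE], uint32_t hash)
{
    return reta[hash % RETA_SIZE];
}
```

Microsoft's table lists 0x51ccc178 for the TCP tuple 66.9.149.187:2794 to 161.142.100.80:1766 with this key, which makes a handy self-check. Because the hash depends only on the 4-tuple, every packet of a flow maps to the same lcore, which is what allows each lcore to keep a private, lock-free TCP stack.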

NGINX vs DPDK-NGINX – Performance testing

Test environment

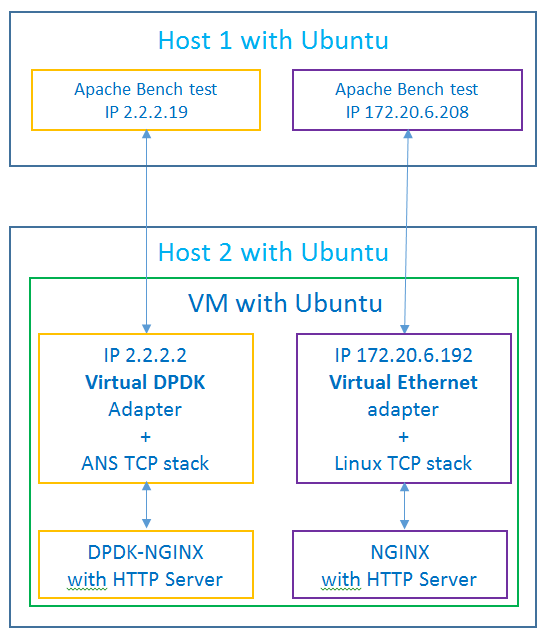

Now let’s move on to the most interesting part. As promised, here are the details of the performance testing we conducted to demonstrate the benefits of DPDK-NGINX. The diagram above shows our test environment.

Host1 – Benchmark test setup

- OS Ubuntu 14.04 LTS

- 1 Ethernet NIC with Ethernet interface, IP 172.20.6.208 and alias IP 2.2.2.19

The benchmark commands, run on Host1:

```
root@h163:~# ab -n 3000 -c 300 2.2.2.2:8000/
This is ApacheBench, Version 2.3 <$Revision: 1528965 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, https://www.zeustech.net/
Licensed to The Apache Software Foundation, https://www.apache.org/

root@h163:~# ab -n 3000 -c 300 172.20.6.192:8000/
```

Host2 – DPDK-NGINX and NGINX test setup

- OS Ubuntu 14.04 LTS

- 1 Ethernet NIC with Ethernet interface, IP 172.20.6.205 and alias IP 2.2.2.20

- Virtual machine (VirtualBox) with 2 virtual network adapters:

  - Virtual network adapter with IP 172.20.6.192, used by the standard Linux TCP stack

  - Virtual network adapter with IP 2.2.2.2, used by DPDK and the ANS TCP stack

- NGINX with an HTTP server at 172.20.6.192:8000

- DPDK-NGINX with an HTTP server at 2.2.2.2:8000

Performance testing results

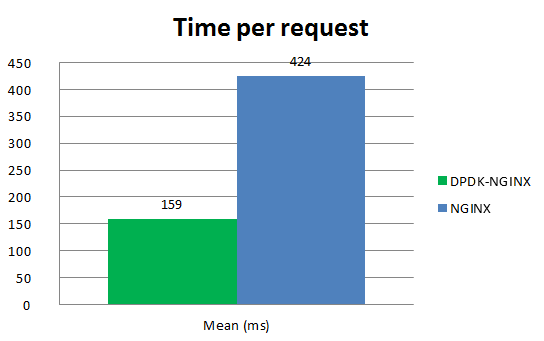

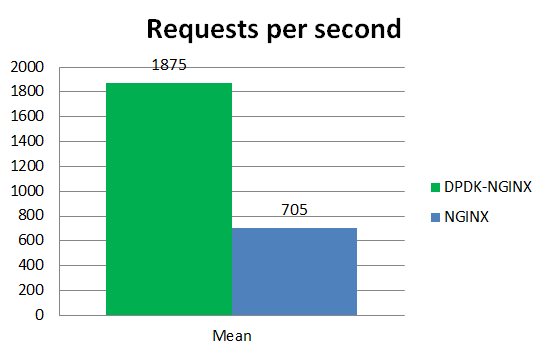

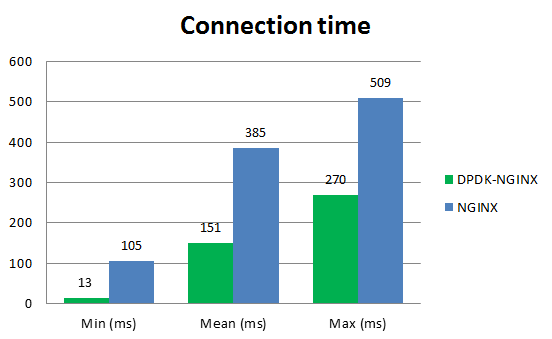

The same ApacheBench test was run on Host1 against both DPDK-NGINX and NGINX in the same hardware/software environment. The results are presented in the charts above and in the following table:

| Metric | DPDK-NGINX | NGINX |
|---|---|---|
| Command line | `ab -n 3000 -c 300 http://2.2.2.2:8000/payload_pic.jpg` | `ab -n 3000 -c 300 http://172.20.6.192:8000/payload_pic.jpg` |
| TCP stack | dpdk-odp | standard Linux stack |
| Server Software | dpdk-nginx/1.9.5 | nginx/1.9.13 |
| Server Hostname | 2.2.2.2 | 172.20.6.192 |
| Server Port | 8000 | 8000 |
| Document Path | payload_pic.jpg | payload_pic.jpg |
| Document Length | 13288 bytes | 13288 bytes |
| Concurrency Level | 300 | 300 |
| Time taken for tests | 1.600 seconds | 4.250 seconds |
| Complete requests | 3000 | 3000 |
| Failed requests | 0 | 0 |
| Total transferred | 40572000 bytes | 40572000 bytes |
| HTML transferred | 39864000 bytes | 39864000 bytes |
| Requests per second (mean) | 1875.45 [#/sec] | 705.94 [#/sec] |
| Time per request (mean) | 159.962 [ms] | 424.962 [ms] |
| Time per request (mean, across all concurrent requests) | 0.533 [ms] | 1.417 [ms] |
| Transfer rate | 24769.07 [Kbytes/sec] | 9324.12 [Kbytes/sec] |
| Connection Times, min (ms) | 13 | 105 |
| Connection Times, mean (ms) | 151 | 385 |
| Connection Times, max (ms) | 270 | 509 |

DPDK-NGINX delivers about 2.7 times the throughput of NGINX (1875.45 vs 705.94 requests per second), even on a virtual machine with a virtual DPDK adapter; we attribute this to the DPDK-based Accelerated Network Stack (ANS). Given these results in a virtual environment, we expect DPDK-NGINX to perform even better on bare metal with a physical DPDK-compatible adapter.
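To make the comparison concrete, the derived ab figures can be recomputed from the raw counters in the table. The C sketch below assumes the summary formulas ApacheBench uses (requests per second = complete requests / time taken, and so on); `struct ab_summary`, `ab_summarize()` and `speedup()` are hypothetical helpers, not part of ab.

```c
#include <assert.h>

/* Derived figures as ApacheBench reports them (our reading of ab's summary formulas). */
struct ab_summary {
    double requests_per_sec;  /* complete requests / time taken */
    double tpr_mean_ms;       /* concurrency * time taken / complete requests */
    double tpr_all_ms;        /* time taken / complete requests */
    double transfer_kb_per_s; /* total bytes transferred / 1024 / time taken */
};

static inline struct ab_summary ab_summarize(int complete, int concurrency,
                                             double seconds, double total_bytes)
{
    assert(complete > 0 && seconds > 0.0);
    struct ab_summary s;
    s.requests_per_sec = complete / seconds;
    s.tpr_mean_ms = concurrency * seconds * 1000.0 / complete;
    s.tpr_all_ms = seconds * 1000.0 / complete;
    s.transfer_kb_per_s = total_bytes / 1024.0 / seconds;
    return s;
}

/* Throughput ratio between a faster and a slower run. */
static inline double speedup(double rps_fast, double rps_slow)
{
    assert(rps_slow > 0.0);
    return rps_fast / rps_slow;
}
```

With the rounded 1.600 s from the table, `ab_summarize(3000, 300, 1.600, 40572000)` gives 1875 requests per second and a 160 ms mean time per request, slightly off ab's 1875.45 and 159.962 ms because ab divides by the unrounded elapsed time. The ratio of the two measured rates, `speedup(1875.45, 705.94)`, is about 2.66.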


What’s next?

According to the official ANS page, ANS performance can be improved by following these recommendations:

- Isolate the ANS lcores from the kernel scheduler with isolcpus, and keep interrupts off the ANS lcores by updating the /proc/irq/default_smp_affinity file.

- Don’t run ANS on lcore 0, since doing so affects ANS performance.

Source: https://github.com/ansyun/dpdk-ans.
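These recommendations boil down to a few concrete values: an isolcpus kernel boot parameter naming the ANS cores, a hex CPU mask for /proc/irq/default_smp_affinity that leaves those cores out, and a check that lcore 0 is not among them. The C sketch below computes them, assuming DPDK lcore IDs map one-to-one to CPU IDs and the machine has at most 32 CPUs (a single mask word); the helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>

/* Mask of CPUs allowed to service new IRQs: every CPU except those running ANS. */
static inline uint32_t irq_default_mask(int n_cpus, const int *ans_cpus, int n_ans)
{
    assert(n_cpus > 0 && n_cpus <= 32);
    uint32_t mask = (n_cpus == 32) ? UINT32_MAX : ((UINT32_C(1) << n_cpus) - 1u);
    for (int i = 0; i < n_ans; i++) {
        assert(ans_cpus[i] >= 0 && ans_cpus[i] < n_cpus);
        mask &= ~(UINT32_C(1) << ans_cpus[i]);
    }
    return mask;
}

/* Second recommendation: ANS should not run on lcore 0. */
static inline bool ans_uses_lcore0(const int *ans_cpus, int n_ans)
{
    for (int i = 0; i < n_ans; i++)
        if (ans_cpus[i] == 0)
            return true;
    return false;
}

/* Hex string to write to /proc/irq/default_smp_affinity. */
static inline int format_mask(char *buf, size_t len, uint32_t mask)
{
    return snprintf(buf, len, "%x", (unsigned)mask);
}

/* Kernel boot parameter isolating the ANS CPUs, e.g. "isolcpus=1,2,3". */
static inline int format_isolcpus(char *buf, size_t len, const int *cpus, int n)
{
    int off = snprintf(buf, len, "isolcpus=");
    for (int i = 0; i < n && off >= 0 && (size_t)off < len; i++)
        off += snprintf(buf + off, len - (size_t)off, i ? ",%d" : "%d", cpus[i]);
    return off;
}
```

On an 8-CPU machine with ANS on CPUs 1, 2 and 3, the mask is `f1` (CPUs 0 and 4 to 7) and the boot parameter is `isolcpus=1,2,3`. Keep in mind that default_smp_affinity only applies to IRQs that have not been activated yet; already-active IRQs keep the mask in their own /proc/irq/<N>/smp_affinity files.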

References

- Demo & Download: Open Source SDN Solution for Dynamic Topology Switchover - August 17, 2017

- DPDK-NGINX vs NGINX: Tech Overview and Performance Testing - June 8, 2016

- OpenDaylight-Based Traffic Steering for Web Server Load Balancing: PoC - April 19, 2016