If you’ve read the previous part of our Embedded story, you might have noticed that the Embedded evolution in the Networking and IoT domains went through similar stages. It all started with manually operated systems, which were replaced by mechanical solutions, followed by specially designed chips, which were finally joined by general-purpose CPUs (enter Embedded systems).

In the still earlier part of the Embedded saga, we concluded that the Embedded software and hardware we used to see 10 years ago are no longer with us, and reviewed some laws and principles that defined their evolution over time.

Today we are going to conclude our journey by further investigating the Embedded evolution in Cloud, Datacenters (DC), and Software Defined Networking (SDN). Also, we will witness the final Embedded transformation that marked the passing of old Embedded Systems and started a new era.

Cloud Systems: The software in heaven

We stopped tracing the life of Embedded Systems at the point where software’s role in the system began to rise. I have previously mentioned that the old Embedded is dead because its old hardware was replaced with more powerful hardware, and its software became less intelligent. How exactly does this work?

A modern Embedded System consists of hardware components that are more powerful, but in general very similar to their predecessors. Its software, on the other hand, has become more primitive, because we no longer need to keep all of it on the embedded device. Only the software elements directly related to specialized hardware functions need to be locked inside, and the rest of the “software brains” has leaked into the Cloud.

Zooming in: Datacenters

A datacenter (DC) looks like a supermarket filled with tons of hardware components on the shelves, connected together and exposed to the outer world. This hardware puzzle is connected in a generic way, just enough to enable availability and regular operations, but once you need to scale or optimize performance, this generic pattern doesn’t work quite as well. For instance, when two virtual machines sitting on opposite shelves talk to each other, their conversation is carried by a dozen software and hardware components, each adding delay.

This is not a big deal for a simple website, but when you are deploying a large Kafka cluster for instance, you’ve got to be careful with workload placement. For example, “noisy neighbor” workloads and choked connections can significantly slow down data processing and cost you a lot of time and money.
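To make the placement point concrete, here is a minimal sketch in Python. The component names and per-component latencies are made-up illustrative values, not measurements from any real datacenter; the only thing it shows is that every extra component on the path between two virtual machines adds delay, and that this delay multiplies with the number of round trips a chatty workload makes.

```python
# Hypothetical per-component latencies in microseconds (illustrative values only).
HOP_LATENCY_US = {
    "vswitch": 20,       # software switch on the host
    "nic": 5,            # network interface card
    "tor_switch": 2,     # top-of-rack switch
    "spine_switch": 2,   # switch connecting racks
}

# Assumed components a packet crosses one way, depending on where the two VMs sit.
PATHS = {
    "same_host": ["vswitch"],
    "same_rack": ["vswitch", "nic", "tor_switch", "nic", "vswitch"],
    "cross_rack": [
        "vswitch", "nic", "tor_switch", "spine_switch",
        "tor_switch", "nic", "vswitch",
    ],
}


def one_way_latency_us(placement):
    """Sum the latency of every component on the path for a placement."""
    return sum(HOP_LATENCY_US[hop] for hop in PATHS[placement])


def round_trips_cost_ms(placement, round_trips):
    """Total time in milliseconds spent on the wire for a number of round trips."""
    return 2 * one_way_latency_us(placement) * round_trips / 1000


if __name__ == "__main__":
    for placement in PATHS:
        print(placement, one_way_latency_us(placement), "us one way")
```

With these assumed numbers the software components dominate the path cost, which is why adding raw compute power alone does not remove the bottleneck.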

Throwing in more compute power doesn’t always help. The right approach in this case is fine-tuning the datacenter infrastructure to reflect your application’s needs. This is what PLVision’s engineers have been doing for the past 5 years: exposing embedded hardware functionality via various APIs. This approach makes it possible to use software to combine pieces of electronics in an efficient order, speeding up communications and computations.

Zooming out: Datacenters and Global Network

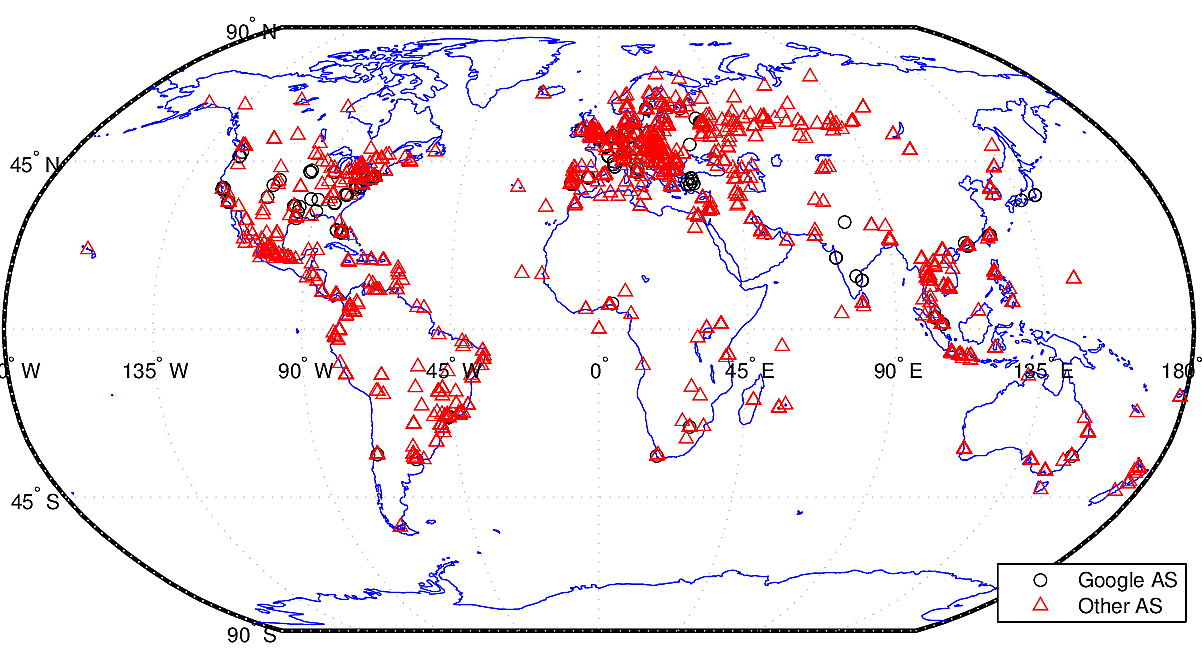

After a journey to a datacenter “supermarket”, let’s take a look at where those are located globally.

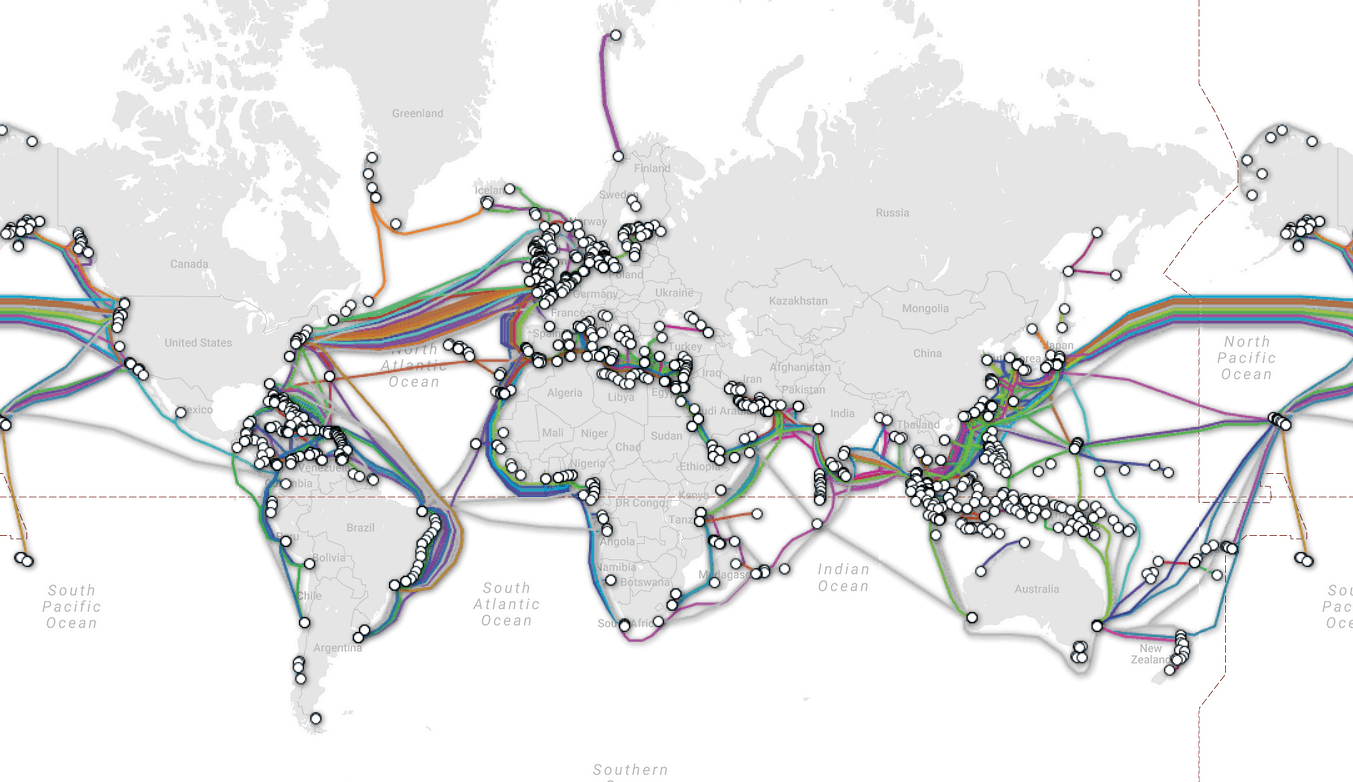

And now, let’s view a map of digital traffic highways – submarine cables currently carrying data between continents. This network is responsible for 99% of international traffic.

I hope you can spot that these two maps correlate considerably, with datacenters placed close to submarine cable endpoints. Compared to a human body, it looks like the brain’s attachment to the spinal cord.

Carrier equipment vendors are now busy exposing APIs that used to be available to network engineers only. Pretty soon your applications will be able to allocate overseas speeds and bandwidth, and book “data transfer flights” for next Friday.

The SDN and NFV world

Earlier I mentioned that decision-making software has started leaking into Cloud platforms, leaving only rudimentary pieces “locked” inside hardware. A similar “software leakage” is happening in the Networking domain. You may be familiar with it via two buzzwords: Software Defined Networking (SDN) and Network Function Virtualization (NFV).

SDN basically means that network devices are no longer responsible for deciding how the network operates, leaving it to centralized software controllers instead. Look at it as a kind of political shift from democracy (network devices “voting” for traffic flow patterns) to dictatorship (a single controller telling the network what to do). And while this would hardly be a productive scheme in society, it works perfectly well in Networking: the control center has better visibility and more data to make an optimal decision.
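The “single controller” idea can be sketched in a few lines of Python. This is a simplified illustration, not the API of any real SDN controller: the topology, the switch names and the `build_flow_rules` helper are all hypothetical. The controller sees the whole topology, computes a path with a breadth-first search, and derives the next-hop rule each switch on that path would receive.

```python
from collections import deque

# Hypothetical topology known to the controller: switch -> neighbouring switches.
TOPOLOGY = {
    "s1": ["s2", "s3"],
    "s2": ["s1", "s4"],
    "s3": ["s1", "s4"],
    "s4": ["s2", "s3", "s5"],
    "s5": ["s4"],
}


def shortest_path(topology, src, dst):
    """Breadth-first search over the global view; returns a list of switches or None."""
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in topology[node]:
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


def build_flow_rules(topology, src, dst):
    """Return {switch: next_hop} for traffic heading to dst, or {} if unreachable."""
    path = shortest_path(topology, src, dst)
    if path is None:
        return {}
    return dict(zip(path, path[1:]))


if __name__ == "__main__":
    print(build_flow_rules(TOPOLOGY, "s1", "s5"))
```

The switches here hold no routing logic of their own; they would only apply the rule handed to them, which is the division of labour described above.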

NFV, on the other hand, runs network functions as software on virtual machines instead of dedicated hardware. Virtualization was not born in the Networking field, but has been widely used in it since the benefits of virtual machines became obvious. If you can run a database or web server on a virtual machine, why not put a switch or router there? This is even easier with modern server CPUs, which are capable of processing 10 Gbps traffic streams.

Today, you can deploy a switch, a firewall or a load balancer virtually in the cloud in minutes, with no screwdrivers or cables. Such network infrastructure can serve you as long as necessary, and can be painlessly removed when no longer needed. Recent research shows that more virtual network ports are now created worldwide each year than physical ports are deployed.

One large distributed computer system

Summing up all of the above, we can see that the world is becoming one large distributed computer system. Its peripherals and brains are spread over long distances and large areas. A nervous system connects everything together and is constantly being upgraded. Nowadays, anyone can build a giant virtual computer system using modern Networking technologies.

Yes, embedded systems still play their part in this huge infrastructure, but the decisive power has been taken away from them and transferred elsewhere, to software components located in the outer world. Software decides how to connect network elements and what to do with them, controls compute and storage power, ties everything together and gets a new system ready to launch in almost no time. There are fragments of embedded software DNA scattered inside this large infrastructure, but no one cares where they are as long as they work.

The real challenge arises when there is a need to scale the network and address high performance requirements. In this case, you need to look under the hood and ask your old and small embedded friends for help; but this is a matter of an entirely different story.

Based on Leonid’s talk at the Lviv IT Arena 2017 conference.
