Is Embedded really dead?

Yes.

Don’t worry, all your smartphones, tablets, TVs and dishwashers will keep working after you’ve read this (well, most probably).

An embedded system is a computer built into a larger mechanical or electrical system. It has a dedicated, specific function that enables the larger system to operate autonomously. For example, you can find embedded devices and embedded software in your washing machine and microwave oven, on a manufacturing plant floor, and in the communications equipment of any modern office.

Embedded systems are dead in a historical sense – their software and hardware from 10 years ago are no longer with us. Somehow they evaporated, probably passing away to a better world. In fact, they have been replaced by less intelligent software running on much more powerful hardware. Sounds like nonsense?

In order to understand this, we must consider several historical constraints of embedded computing:

- Small footprint

- Limited resources

- High reliability & timing constraints

- Autonomy

These constraints dictate how such systems are designed, programmed, built and operated. But what creates them, and how did they shape the evolution of Embedded Systems over time?

Let’s step back in time to the beginnings of computers and software to understand what happened to our good old embedded friends.

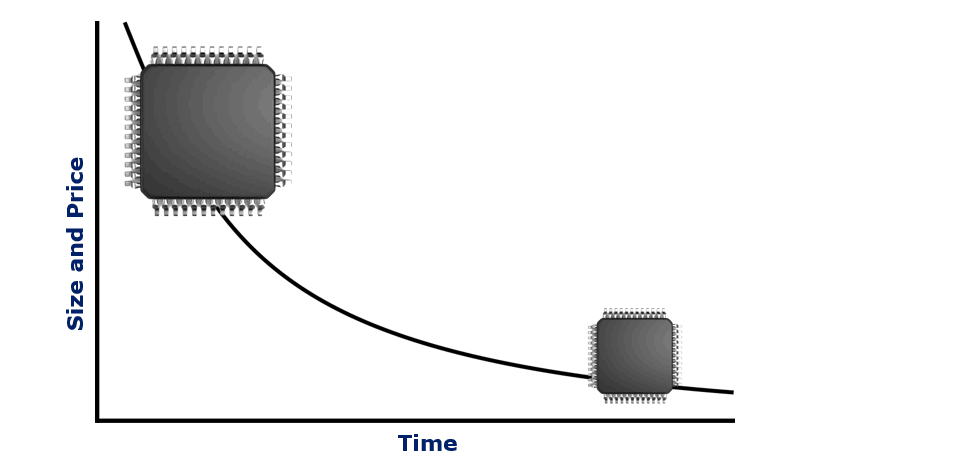

Moore’s law (CPU power)

A good friend of mine likes to say: “When I was small and computers were big …”. I would just add that they were also very slow and extremely expensive.

About 50 years ago, Intel co-founder Gordon Moore predicted that the number of transistors on a chip, and with it the computational power of computers, would double roughly every two years. At the same time, components would shrink and their price would halve over the same period. In fact, this prediction came true so well that there are conspiracy theories claiming it is actually Intel’s business plan.
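A quick back-of-the-envelope illustration of what doubling every two years means over one decade (our own arithmetic, assuming an idealized, constant two-year period that real hardware only approximates; C_0 and P_0 are simply symbols for the starting capacity and price):

```latex
\frac{C(t)}{C_0} = 2^{t/2}, \qquad
\frac{C(10)}{C_0} = 2^{10/2} = 2^{5} = 32, \qquad
\frac{P(10)}{P_0} = 2^{-5} = \frac{1}{32}
```

In other words, a decade of Moore’s Law is roughly a 32-fold gain in capability for the money, which is why hardware from ten years ago feels so constrained today.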

About 8 years ago, PLVision was working on a set of protocols called Audio Video Bridging (AVB). The main idea behind it is to replace analogue connections with Ethernet in places like recording studios, concert halls and broadcasting stations. Stream synchronization and bandwidth were among the key challenges. The protocols had to respond within milliseconds, and the device we used was quite limited in CPU horsepower and memory: a single-core 400 MHz MIPS CPU with 128 Mb of RAM. That seems reasonable until you try to control several hundred media streams. Long story short, we spent months putting the code together, counting every available byte and millisecond.

Several years later, we started working with switches and routers equipped like high-end servers, with multi-core CPUs and gigabytes of memory. Things got a lot more relaxed on the computing-resources side. Now you can simply take a whole core and dedicate it to a particular protocol or application. This makes a huge difference for time-critical applications running in parallel – with each one on its own dedicated core, there are no more unexpected delays and no more asking “who jumped in and messed everything up”.
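For readers who want to try the “dedicate a core” idea on a Linux machine, here is a minimal Python sketch using the standard library’s `os.sched_setaffinity` (Linux-only). The function name `pin_to_core` and the choice of the highest-numbered allowed core are our own illustrative assumptions, not how the switch software described above actually did it. Note that pinning only keeps this process on one core; keeping other tasks off that core typically also requires isolating it, for example with the `isolcpus` kernel parameter or cpusets.

```python
import os


def pin_to_core(core: int, pid: int = 0) -> set:
    """Restrict a process (pid 0 = the calling process) to a single CPU core.

    Linux-only: os.sched_setaffinity / os.sched_getaffinity do not exist
    on macOS or Windows. Returns the affinity set after the change.
    """
    allowed = os.sched_getaffinity(pid)  # cores this process may currently use
    if core not in allowed:
        raise ValueError(f"core {core} is not in the allowed set {sorted(allowed)}")
    # From now on the scheduler will run this process only on `core`.
    # Other processes can still be scheduled there unless the core is isolated.
    os.sched_setaffinity(pid, {core})
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    # Hypothetical choice: dedicate the highest-numbered core we are allowed to use.
    target = max(os.sched_getaffinity(0))
    print("now running only on core(s):", sorted(pin_to_core(target)))
```

In a real system each time-critical protocol daemon would be pinned to its own core in the same way, typically at startup.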

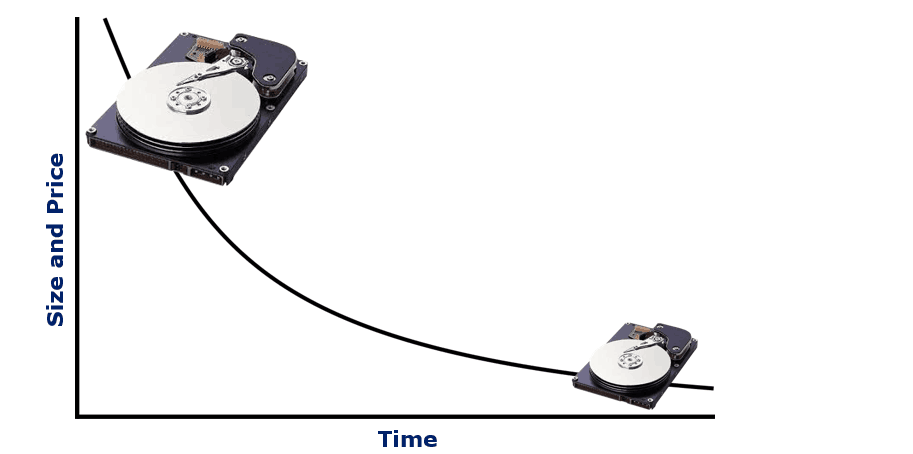

Kryder’s law (storage)

A prediction by Mark Kryder relates to the third pillar of any computer system: persistent storage. It states that the data storage capacity of a hard drive of the same physical dimensions doubles every two years or so. As with Moore’s Law, the cost per megabyte of persistent storage halves over the same period. The two trends are closely correlated, because the first drives the second: fitting more data into the same drive lowers the price of each megabyte.

Embedded systems were among the first technologies to make active use of flash memory. Although it was expensive, having no moving parts helped it meet reliability requirements. About 10 years ago, we barely had 1 or 2 Mb of persistent storage. That was a tight space for a bootloader, an operating system, applications and user data.

On a past project, we were required to switch from a commercial RTOS to an embedded Linux operating system. We obtained the source code and installed cross compilers and toolchains – everything needed for a development environment. We kicked off the build and, several hours later, got an image to write to flash memory. The image was 6 Mb – three times the size of the flash we had – and that was just a generic Linux with a basic filesystem, without any applications on top. Luckily, we managed to attach a USB flash drive and boot from it. Still, in parallel we had to strip out two thirds of the standard operating system.

Nowadays, given current prices and storage capacities, I doubt anyone worries much about kernel or root filesystem size.

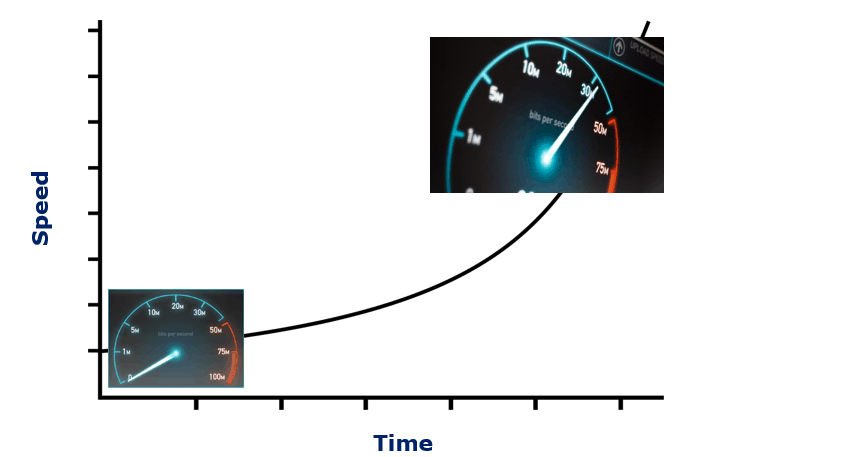

Keck’s law (fiber speed)

Now let’s move from the world of electronics to the domain of fiber optics. Most traffic these days is carried over tiny glass fibers. Donald Keck co-invented a glass capable of carrying light over long distances with very low loss. He noticed that data rates over fiber follow an exponential growth pattern, as in Moore’s Law. In brief, network speed doubles every year.

The best illustration of this is the ports of switches, routers and network interface cards: over the past 10 years, we have moved from 100 Mb/s to 100 Gb/s interfaces.
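A quick sanity check of Keck’s yearly doubling against the port speeds above (our own arithmetic, assuming the rate holds exactly for ten years): ten doublings give a factor of about a thousand, which lines up well with the jump from 100 Mb/s to 100 Gb/s.

```latex
2^{10} = 1024 \;\approx\; \frac{100\ \text{Gb/s}}{100\ \text{Mb/s}} = 1000
```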

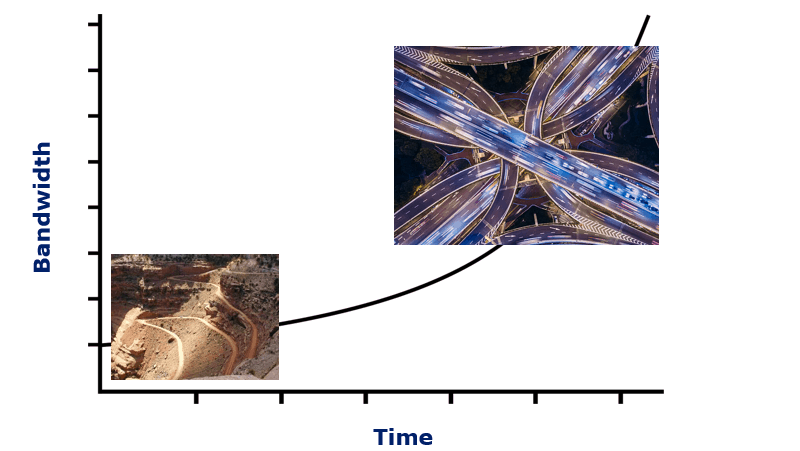

Nielsen’s law (user bandwidth)

Apart from the speed increase, we are also getting bandwidth improvements. End-user connection bandwidth grows by 50% annually, which works out to the already familiar doubling roughly every two years. It means that besides faster transmission, we are getting wider channels to communicate over. To compare it with a water supply system: not only does the water pressure increase, but the diameter of the pipes grows as well.
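Compounding shows why 50% yearly growth is close to a two-year doubling (our own arithmetic, assuming a constant 50% rate), and what it adds up to over a decade:

```latex
1.5^{2} = 2.25 \approx 2, \qquad 1.5^{10} \approx 57.7
```

So over ten years, edge bandwidth grows somewhat faster than the idealized two-year doubling (2^5 = 32), but far slower than the backbone’s yearly doubling.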

While Keck’s prediction mainly describes the progress of core backbone networks, Nielsen’s Law predicts the improvement of edge networks closer to end users.

Better performance, same price

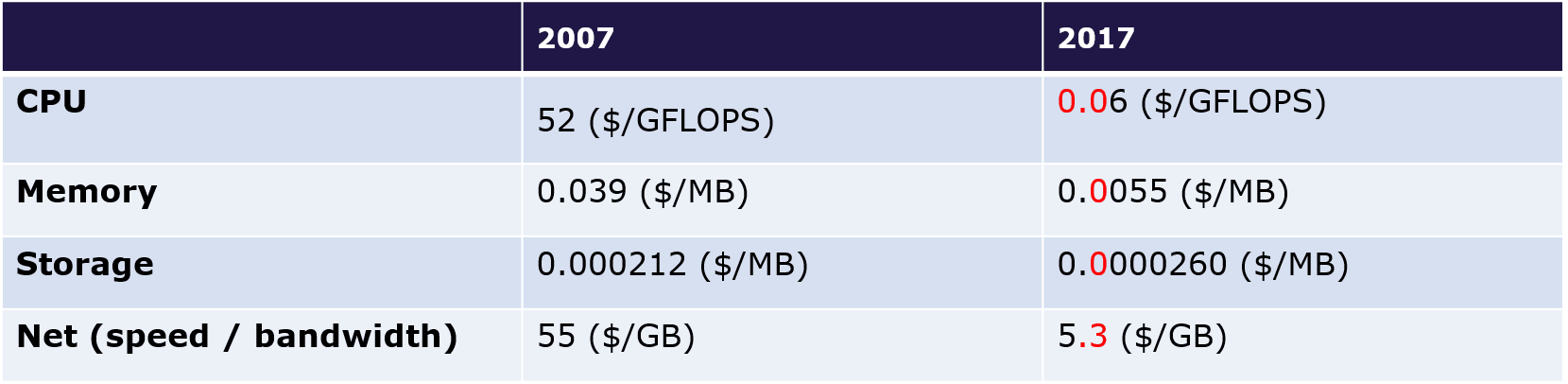

Summing up the predictions of the prophets we talked about above, here is a comparison of the price and capacity of computer components today and a decade ago. The logical question is: where are the effects of those laws? A good personal computer still costs about $1,000 year after year. My internet provider’s monthly bill goes up by 25% every year. Where are the promised cost improvements?

It looks like this is simply how computer manufacturers and service providers do business. You pay the same price over and over again, but you get more power and bandwidth. Don’t pay too much attention to the exact numbers – there are many different studies and approaches. They show a fairly similar order of magnitude, although the exact figures vary from source to source. Each highlighted extra zero means a 10-fold increase in performance.

The clear leader in this race is the CPU, which is now over-provisioned with computing power. That means it has enough headroom for operating system overhead, various runtime environments, frameworks, orchestrators and so on.

What’s next?

We’ve taken a swift flight over the history of some of the most defining predictions and laws that shaped Embedded Systems as we know them today, and this concludes the first part of our exploration. In the next part, I will talk about what actually happened to Embedded Systems from the very beginning until today, through the prism of several domains: Networking, Internet of Things, Cloud Systems, and SDN.

Based on Leonid’s talk at the Lviv IT Arena 2017 conference.
