Exploring the internal mechanics of OpenStack Neutron, specifically the functionality of the Open vSwitch plugin.

I’ve been waiting for a chance to get my hands on OpenStack for a while now. It is one of the most advanced free open-source platforms for cloud computing, and I’d say it is definitely going to influence the evolution of networking technologies, at least in datacenter (cloud) areas. From the perspective of the most recent networking trends, SDN (Software-Defined Networking) in particular, OpenStack has certainly provided a good solution to the north-bound interface problem. Since OpenStack clearly specifies its requirements for the networking infrastructure, most of the SDN/OpenFlow controllers have implemented an API for it.

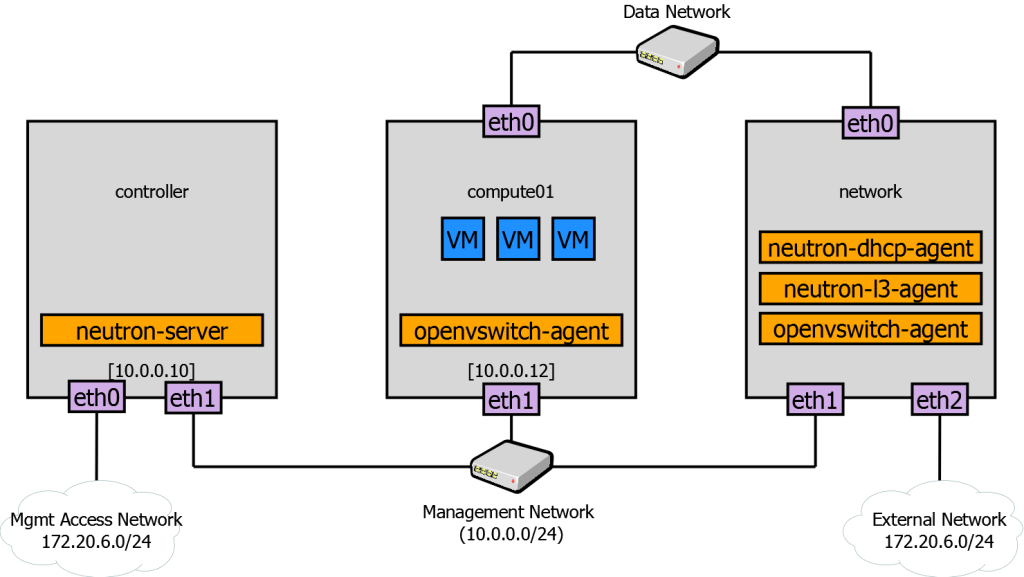

Deployment setup. Three nodes

For this experiment, I ran a basic three-node OpenStack setup with separate networks for access, management, and data traffic. Just follow the Installation Guide to get it up and running. From the networking point of view, the network and compute nodes are the most interesting. The network node runs l3-agent and dhcp-agent, which provide connectivity to the external network and IP address assignment via DHCP. The plugin-openvswitch-agent runs on both nodes and implements Neutron logical networks.

OpenStack and OVS Plugin Internals

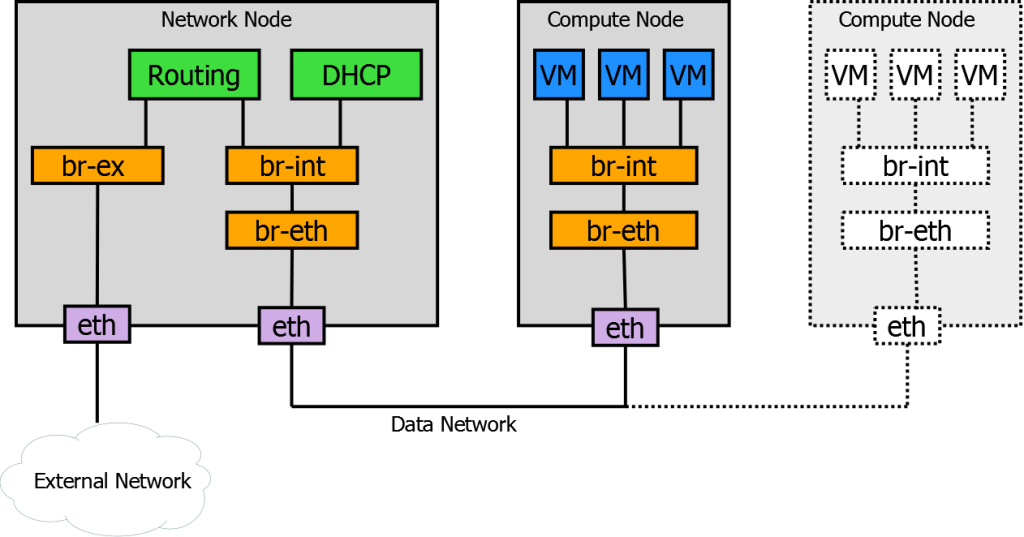

Let’s take a closer look at how OpenStack networking is organized. The key elements are OVS software switches which are used to build virtual networks on top of different physical environments. Note that in our case VLANs are used to isolate VM traffic on different Neutron networks.

There are three types of OVS instances, each having a different purpose:

br-int – the integration bridge, provisioned on both compute and network nodes. It connects VM instances and supports networking services (like DHCP), and it is the bridge that actually implements the Neutron networking model.

br-eth – the physical connectivity bridge. Its main purpose is to translate virtual network traffic into an isolated space of the underlying physical network. In our case, VLANs are used to separate Neutron networks, so br-eth rewrites the internal VLAN tag to the VLAN ID assigned on the physical network.

br-ex – bridge to external network. It establishes connectivity of the Neutron networks to the outer world.

On top of that, routing and DHCP functions are implemented with regular Linux services and isolated in Linux network namespaces.

Let’s proceed to creating a network and starting two VMs to inspect the run-time network configuration.

Both VMs are running on the compute node. The OVS bridge br-int functions as a regular L2 switch, forwarding packets between VMs and to br-eth using flows with the NORMAL action. The ports of br-int that face VMs are configured as “access ports” and tag traffic with internal VLAN IDs. The port connected to br-eth is a “trunk port”; it participates in all VLANs. Ingress traffic from the external network (via br-eth) has its VLAN ID translated and is then forwarded with the NORMAL action:

compute01:~$ sudo ovs-vsctl show

...

Bridge br-int

Port "int-br-eth2"

Interface "int-br-eth2"

Port "tapb4583624-e3"

tag: 1

Interface "tapb4583624-e3"

Port "tapc8572667-96"

tag: 1

Interface "tapc8572667-96"

Port "tap3b5caddf-ae"

tag: 2

Interface "tap3b5caddf-ae"

compute01:~$ sudo ovs-ofctl show br-int | grep addr

...

1(int-br-eth2): addr:d2:b6:e0:a8:b6:23

2(tapb4583624-e3): addr:fe:16:3e:f5:18:41

3(tapc8572667-96): addr:fe:16:3e:b1:93:c1

4(tap3b5caddf-ae): addr:fe:16:3e:17:7c:c1

compute01:~$ sudo ovs-ofctl dump-flows br-int

...

cookie=0x0, duration=72586.295s, table=0, n_packets=667, n_bytes=97285, idle_age=7504, hard_age=65534, priority=3,in_port=1,dl_vlan=101 actions=mod_vlan_vid:1,NORMAL

cookie=0x0, duration=2781.882s, table=0, n_packets=326, n_bytes=47890, idle_age=2733, priority=3,in_port=1,dl_vlan=102 actions=mod_vlan_vid:2,NORMAL

cookie=0x0, duration=76210.237s, table=0, n_packets=34, n_bytes=2336, idle_age=2908, hard_age=65534, priority=2,in_port=1 actions=drop

cookie=0x0, duration=76210.854s, table=0, n_packets=1372, n_bytes=136385, idle_age=2733, hard_age=65534, priority=1 actions=NORMAL
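To make the priority logic in this flow table easier to follow, here is a minimal Python sketch that parses the match and action parts of the four flows above and picks the highest-priority match for a packet. The `parse_flow` and `lookup` helpers are my own illustration, not part of OVS or Neutron, and the model is deliberately simplified: it only handles exact matches on the fields that appear in this dump.

```python
# Flow entries copied from the br-int dump above (counters trimmed for brevity).
FLOWS = """\
priority=3,in_port=1,dl_vlan=101 actions=mod_vlan_vid:1,NORMAL
priority=3,in_port=1,dl_vlan=102 actions=mod_vlan_vid:2,NORMAL
priority=2,in_port=1 actions=drop
priority=1 actions=NORMAL
"""


def parse_flow(line):
    """Split one flow line into (priority, match dict, list of actions)."""
    match_part, actions_part = line.split(" actions=")
    fields = dict(
        item.split("=", 1) for item in match_part.split(",") if "=" in item
    )
    priority = int(fields.pop("priority"))
    match = {key: int(value) for key, value in fields.items()}
    return priority, match, actions_part.split(",")


def lookup(flows, packet):
    """Return the actions of the highest-priority flow matching the packet."""
    for priority, match, actions in sorted(flows, key=lambda f: -f[0]):
        if all(packet.get(key) == value for key, value in match.items()):
            return actions
    return []


flows = [parse_flow(line) for line in FLOWS.splitlines()]

# Tagged traffic arriving from br-eth2 on port 1 is rewritten to the local tag.
print(lookup(flows, {"in_port": 1, "dl_vlan": 101}))
# Traffic from br-eth2 with an unknown VLAN falls through to the drop rule.
print(lookup(flows, {"in_port": 1, "dl_vlan": 300}))
# Traffic from a VM port only matches the catch-all NORMAL rule.
print(lookup(flows, {"in_port": 2}))
```

In this model, a frame from the trunk port with VLAN 101 yields `['mod_vlan_vid:1', 'NORMAL']`, an unknown VLAN on the same port yields `['drop']`, and anything from a VM-facing port yields `['NORMAL']`.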

Meanwhile, the physical bridge (br-eth) translates internal (logical) VLANs into VLAN IDs from the physical network's pool (external VLANs) and sends the traffic out to the network.

compute01: ~$ sudo ovs-vsctl show

...

Bridge "br-eth2"

Port "eth2"

Interface "eth2"

Port "phy-br-eth2"

Interface "phy-br-eth2"

compute01: ~$ sudo ovs-ofctl show br-eth2 | grep addr

1(phy-br-eth2): addr:aa:c4:98:e7:d5:ac

2(eth2): addr:00:0c:42:07:27:ad

compute01: ~$ sudo ovs-ofctl dump-flows br-eth2

NXST_FLOW reply (xid=0x4):

cookie=0x0, duration=4910.411s, table=0, n_packets=454, n_bytes=45179, idle_age=4861, priority=4,in_port=1,dl_vlan=2 actions=mod_vlan_vid:102,NORMAL

cookie=0x0, duration=74714.808s, table=0, n_packets=918, n_bytes=91362, idle_age=10550, hard_age=65534, priority=4,in_port=1,dl_vlan=1 actions=mod_vlan_vid:101,NORMAL

cookie=0x0, duration=78338.635s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=1 actions=drop

cookie=0x0, duration=78339.183s, table=0, n_packets=1027, n_bytes=147539, idle_age=4861, hard_age=65534, priority=1 actions=NORMAL
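Putting the two flow tables side by side, the translation is symmetric: br-eth2 rewrites the internal tag to the physical one on the way out, and br-int applies the inverse on the way in. The sketch below captures just that mapping, using the VLAN IDs from the dumps above. The function names are hypothetical, and `None` stands in for the priority=2 drop rule that catches unmapped VLANs.

```python
# Internal (br-int) VLAN -> physical VLAN, as seen in the br-eth2 flows above.
INTERNAL_TO_PHYSICAL = {1: 101, 2: 102}

# br-int holds the inverse mapping for traffic arriving from the network.
PHYSICAL_TO_INTERNAL = {
    physical: internal for internal, physical in INTERNAL_TO_PHYSICAL.items()
}


def egress(internal_vlan):
    """br-eth2 direction: internal tag -> physical tag, None if dropped."""
    return INTERNAL_TO_PHYSICAL.get(internal_vlan)


def ingress(physical_vlan):
    """br-int direction: physical tag -> internal tag, None if dropped."""
    return PHYSICAL_TO_INTERNAL.get(physical_vlan)


# A frame leaving a VM on internal VLAN 2 and coming back keeps its identity.
assert ingress(egress(2)) == 2
# An internal tag with no mapping never reaches the physical network.
assert egress(7) is None
```

The internal tags are picked locally by the agent on each host, so only the physical VLAN ID has to be agreed on across nodes.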

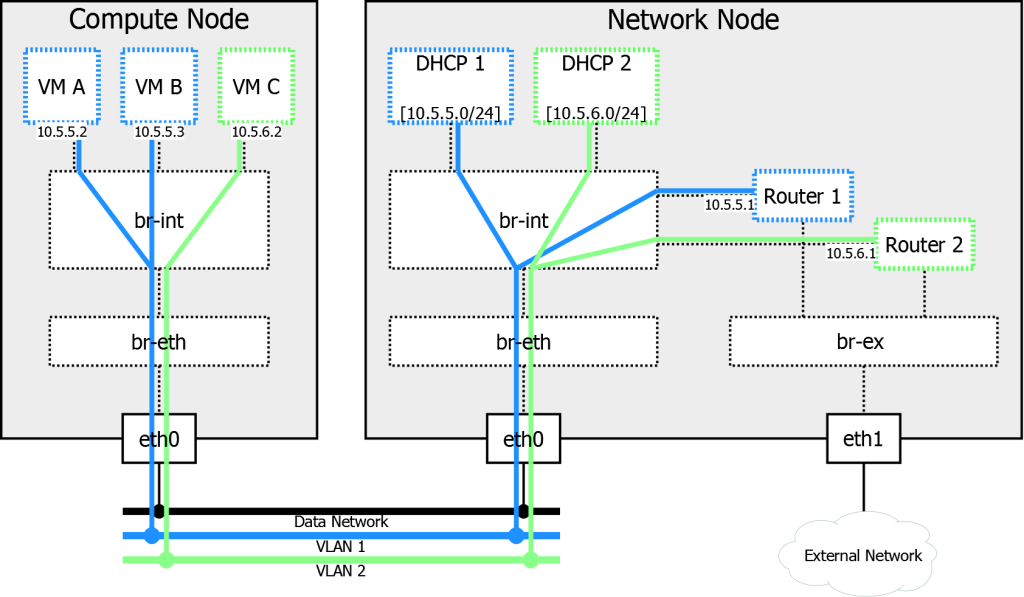

On the network node, we have a very similar picture with br-eth and br-int. However, the interface configuration is a bit different.

network:~$ sudo ovs-vsctl show

...

Bridge br-int

Port "qr-e4c5ab67-c8"

tag: 2

Interface "qr-e4c5ab67-c8"

type: internal

Port "tap2059eced-e9"

tag: 2

Interface "tap2059eced-e9"

type: internal

Port "int-br-eth1"

Interface "int-br-eth1"

Port "tap9d14555d-a8"

tag: 1

Interface "tap9d14555d-a8"

type: internal

Port "qr-52a0ab1a-31"

tag: 1

Interface "qr-52a0ab1a-31"

type: internal

One of them, tapXXX, is created by neutron-dhcp-agent. It attaches br-int to a network namespace that runs dnsmasq to provide subnet 10.5.5.0/24 with IP address assignment via DHCP.

network:~$ ip netns | grep dhcp

qdhcp-08173eaa-6fff-450c-be09-6f60e66b2a73

qdhcp-8b1f65db-30d9-4852-b0ea-624506551dc3

network:~$ sudo ip netns exec qdhcp-8b1f65db-30d9-4852-b0ea-624506551dc3 netstat -pan

...

udp 0 0 0.0.0.0:67 0.0.0.0:* 6077/dnsmasq

network:~$ cat /var/lib/neutron/dhcp/8b1f65db-30d9-4852-b0ea-624506551dc3/host

fa:16:3e:3f:b8:b2,host-10-5-5-1.openstacklocal,10.5.5.1

fa:16:3e:f5:18:41,host-10-5-5-3.openstacklocal,10.5.5.3

fa:16:3e:b1:93:c1,host-10-5-5-4.openstacklocal,10.5.5.4
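Each line of this host file is a MAC address, a hostname, and an IP address, separated by commas. As a rough illustration, the Python sketch below parses the file and correlates its entries with the tap ports seen on br-int on the compute node. Note that the tap MACs in the earlier `ovs-ofctl show` output start with `fe:` while the reservations start with `fa:`; matching on the remaining five octets is my assumption based on these listings, not something the agent itself does.

```python
# Contents of the dnsmasq host file shown above.
HOSTS_FILE = """\
fa:16:3e:3f:b8:b2,host-10-5-5-1.openstacklocal,10.5.5.1
fa:16:3e:f5:18:41,host-10-5-5-3.openstacklocal,10.5.5.3
fa:16:3e:b1:93:c1,host-10-5-5-4.openstacklocal,10.5.5.4
"""

# Tap ports and addresses from "ovs-ofctl show br-int" on the compute node.
TAP_PORTS = {
    "tapb4583624-e3": "fe:16:3e:f5:18:41",
    "tapc8572667-96": "fe:16:3e:b1:93:c1",
    "tap3b5caddf-ae": "fe:16:3e:17:7c:c1",
}


def parse_hosts(text):
    """Map each reserved MAC address to its (hostname, IP) pair."""
    entries = {}
    for line in text.splitlines():
        mac, hostname, ip = line.strip().split(",")
        entries[mac.lower()] = (hostname, ip)
    return entries


def tail(mac):
    """Return the last five octets of a MAC address."""
    return mac.lower().split(":")[1:]


def ip_for_tap(tap_mac, reservations):
    """Find the reserved IP whose MAC differs only in the first octet."""
    for mac, (_hostname, ip) in reservations.items():
        if tail(mac) == tail(tap_mac):
            return ip
    return None


reservations = parse_hosts(HOSTS_FILE)
for port, mac in TAP_PORTS.items():
    print(port, ip_for_tap(mac, reservations))
```

With the data above, the two ports tagged with VLAN 1 resolve to 10.5.5.3 and 10.5.5.4, while tap3b5caddf-ae (tagged with VLAN 2) has no entry in this file, which fits it belonging to the other network.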

Another interesting interface is qr-XXX. It plays the role of an uplink to the external network. This interface connects br-int to another Linux network namespace that performs routing and NAT for this virtual network.

network: ~$ ip netns | grep qrouter

qrouter-a6dd3417-df21-433b-8857-827e895ea3c6

qrouter-3e810636-1f43-4d08-acc2-2edb25a10e0e

network: ~$ sudo ip netns exec qrouter-3e810636-1f43-4d08-acc2-2edb25a10e0e route -n

...

0.0.0.0 172.20.6.1 0.0.0.0 UG 0 0 0 qg-900380a6-98

10.5.5.0 0.0.0.0 255.255.255.0 U 0 0 0 qr-52a0ab1a-31

172.20.6.0 0.0.0.0 255.255.255.0 U 0 0 0 qg-900380a6-98

network: ~$ sudo ip netns exec qrouter-3e810636-1f43-4d08-acc2-2edb25a10e0e iptables-save

...

-A neutron-l3-agent-snat -j neutron-l3-agent-float-snat

-A neutron-l3-agent-snat -s 10.5.5.0/24 -j SNAT --to-source 172.20.6.31

-A neutron-postrouting-bottom -j neutron-l3-agent-snat
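To see how the routing table and the SNAT rule work together, here is a small Python sketch of what happens to a packet inside the qrouter namespace. It does a longest-prefix-match lookup over the three routes listed above and then applies the source rewrite from the `-s 10.5.5.0/24 -j SNAT` rule. This is a simplified model under my own assumptions: it ignores the `neutron-l3-agent-float-snat` chain, connection tracking, and any rules hidden behind the "..." in the output.

```python
import ipaddress

# (destination, gateway, interface) taken from "route -n" in the namespace.
ROUTES = [
    ("0.0.0.0/0", "172.20.6.1", "qg-900380a6-98"),
    ("10.5.5.0/24", None, "qr-52a0ab1a-31"),
    ("172.20.6.0/24", None, "qg-900380a6-98"),
]

# Values from the SNAT rule in the iptables-save output.
SNAT_SOURCE = ipaddress.ip_network("10.5.5.0/24")
SNAT_TO = "172.20.6.31"


def route(dst):
    """Longest-prefix match: return (interface, gateway) for a destination."""
    addr = ipaddress.ip_address(dst)
    candidates = [
        (ipaddress.ip_network(net), gateway, iface)
        for net, gateway, iface in ROUTES
        if addr in ipaddress.ip_network(net)
    ]
    _net, gateway, iface = max(candidates, key=lambda c: c[0].prefixlen)
    return iface, gateway


def translate_source(src):
    """Apply the SNAT rule: rewrite sources that fall inside 10.5.5.0/24."""
    if ipaddress.ip_address(src) in SNAT_SOURCE:
        return SNAT_TO
    return src


# A VM at 10.5.5.3 talking to an outside host leaves via qg-... as 172.20.6.31.
print(route("8.8.8.8"), translate_source("10.5.5.3"))
# Return traffic towards the VM subnet goes out through the qr-... interface.
print(route("10.5.5.3"))
```

The first lookup falls through to the default route via 172.20.6.1 on the qg- interface, and the second picks the directly connected /24 on the qr- interface.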

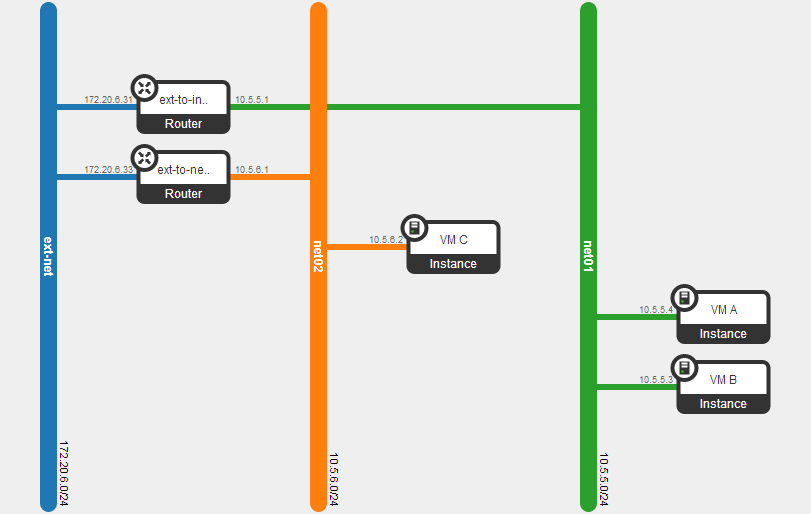

Now, let’s piece it all together. The diagram shows how the virtual topology is built from OVS instances and then connected to an external network.

To sum up, OpenStack Neutron uses the overlay approach for connecting parts of a virtual network. The physical network, in turn, provides transport and basic traffic isolation (VLANs in our case). Open vSwitch instances create a network virtualization layer on top of it, allowing new virtual networks to be created and running ones to be modified dynamically, without any changes to the underlying networking hardware.

The two OpenStack networking components in question have found their place in the ML2 framework, which will apparently be a major part of the next OpenStack release. Most importantly, the Neutron ML2 plugin separates physical network management into ML2 type drivers and the network virtualization layer into ML2 mechanism drivers.
