On April 17, OpenStack® issued its ninth release, code-named Icehouse™, which arrived together with Ubuntu 14.04 LTS. Frankly speaking, it seems that the vast, monolithic Neutron plugins are now history. The Icehouse release is likely to foster adoption of the Modular Layer 2 (ML2) plugin, first introduced in the Havana release, which should eventually reduce the complexity of adding new L2 networking technologies and significantly simplify their support.

ML2 Plugin Overview

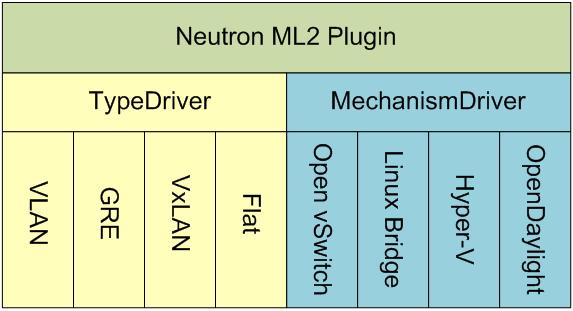

Basically, the ML2 plugin is a Neutron plugin that provides an interface for extensible sets of specific drivers of two types:

- Layer 2 network type drivers (AKA TypeDrivers);

- Networking mechanisms (AKA MechanismDrivers) for connecting to networks of the aforementioned types.

In contrast to the monolithic Neutron plugins, multiple MechanismDrivers can be used concurrently, and they can utilize existing L2 agents and/or interact with external devices or controllers. A MechanismDriver is called upon creation, update, and deletion of networks, subnets, or ports. For every event, two methods get called: one during the database transaction and another right after it. Device vendors can freely implement their own MechanismDrivers to provide vendor-specific hardware support in OpenStack. MechanismDriver implementations currently exist for Open vSwitch, Linux Bridge, and Hyper-V. Each MechanismDriver uses the resources and information provided by the selected TypeDriver.
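The two-call pattern described above can be sketched in a few lines of plain Python. This is a minimal, self-contained illustration, not Neutron code: the method names `create_network_precommit` and `create_network_postcommit` mirror the hooks of the ML2 driver API, while `RecordingMechanismDriver`, `fake_transaction`, and the dictionary-based context are hypothetical stand-ins invented for the example.

```python
from contextlib import contextmanager


class RecordingMechanismDriver:
    """Hypothetical driver that only records which hook was invoked."""

    def __init__(self, events):
        self.events = events

    def create_network_precommit(self, context):
        # Called inside the database transaction: a real driver would
        # validate here and raise to abort, without blocking on I/O.
        self.events.append("precommit:" + context["network"]["name"])

    def create_network_postcommit(self, context):
        # Called after the transaction has committed: a real driver would
        # talk to agents, switches, or an external controller here.
        self.events.append("postcommit:" + context["network"]["name"])


@contextmanager
def fake_transaction(events):
    """Stand-in for a database transaction (assumption, not Neutron's API)."""
    events.append("begin")
    yield
    events.append("commit")


def create_network(drivers, network, events):
    """Invoke every driver twice: once inside and once after the transaction."""
    context = {"network": network}
    with fake_transaction(events):
        for driver in drivers:
            driver.create_network_precommit(context)
    for driver in drivers:
        driver.create_network_postcommit(context)


events = []
create_network([RecordingMechanismDriver(events)], {"name": "net1"}, events)
```

After the call, `events` shows the ordering: the precommit hook lands between the transaction's begin and commit markers, and the postcommit hook follows the commit.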

As of now, the ML2 framework includes TypeDrivers for the following networks:

- local – provides connectivity between VMs and other devices running on the same node. Does not provide any connectivity between nodes;

- flat – provides connectivity between VMs and other devices using any IEEE 802.1D conformant physical network without the use of VLANs, tunneling, or other segmentation approaches. Only one Flat network can exist on each physical network;

- vlan – provides connectivity between VMs and other devices using any IEEE 802.1Q conformant physical network. The physical network becomes segmented via VLAN headers. Consequently, up to 4094 segments can exist on each physical network;

- gre and vxlan – provide connectivity between VMs and other devices by using tunnel endpoints to organize network segments.
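To show how the type and mechanism drivers listed above are typically selected, here is a small sketch that builds an ML2-style configuration with Python's standard `configparser`. The option names (`type_drivers`, `tenant_network_types`, `mechanism_drivers`, `network_vlan_ranges`, `vni_ranges`) follow the ML2 configuration file as I know it; the concrete values, including the `physnet1` label and the ID ranges, are assumptions chosen purely for illustration.

```python
import configparser
import io

# A 12-bit VLAN ID gives 4096 values; 0 and 4095 are reserved,
# which leaves the 4094 usable segments mentioned above.
USABLE_VLAN_IDS = range(1, 4095)


def build_ml2_config():
    """Return an illustrative ML2 configuration (values are assumptions)."""
    cfg = configparser.ConfigParser()
    cfg["ml2"] = {
        "type_drivers": "local,flat,vlan,gre,vxlan",
        "tenant_network_types": "vxlan",
        # Several mechanism drivers can be enabled at the same time.
        "mechanism_drivers": "openvswitch,linuxbridge",
    }
    cfg["ml2_type_vlan"] = {
        # 'physnet1' is a hypothetical physical network label.
        "network_vlan_ranges": "physnet1:%d:%d"
        % (USABLE_VLAN_IDS[0], USABLE_VLAN_IDS[-1]),
    }
    cfg["ml2_type_vxlan"] = {"vni_ranges": "1001:2000"}
    return cfg


def render(cfg):
    """Serialize the configuration to INI text."""
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


ml2_config = build_ml2_config()
ml2_ini_text = render(ml2_config)
```

Printing `ml2_ini_text` yields an INI fragment that can be compared against a real `ml2_conf.ini`; treat it as a starting point rather than a verified deployment configuration.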

Although the ML2 plugin was included in the Havana release, the official Installation Guide for Ubuntu 12.04 (LTS) covers installation of the monolithic OVS plugin only. When you decide to move on to the more advanced ML2 plugin, it becomes apparent that it uses a different database structure, and the easiest way to proceed is to delete all Neutron resources (routers, subnets, networks, etc.) and simply create an ML2 database from scratch.

Since these steps result in an empty Neutron database, the Icehouse release provides a migration tool as part of live migration. It transfers the data from the Open vSwitch or LinuxBridge plugin schema to the ML2 schema, thus preserving the provisioned resources.

I’d like to note that the Havana Installation Guide contains some confusing configuration inaccuracies. To avoid typical mistakes and save a lot of time and nerves during the OpenStack configuration process, it’s advisable to look through the users’ comments as well.

OpenDaylight Controller Support

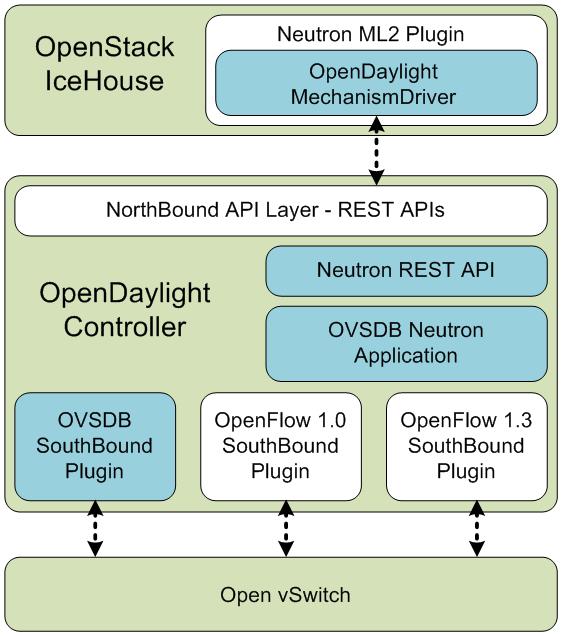

Icehouse has also introduced support for the OpenDaylight SDN controller. It is implemented as a lightweight ML2 MechanismDriver that acts as a REST proxy, passing all Neutron API calls to OpenDaylight. The major functionality resides inside the OpenDaylight controller itself.
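The REST-proxy idea can be illustrated with a sketch that maps a Neutron operation onto an HTTP request without sending it. Everything specific here is an assumption made for the example: the base URL, the host name `odl.example.net`, the resource path layout, and the payload shape are illustrative and are not taken from the actual driver.

```python
import json

# Assumed northbound endpoint; host, port, and path are illustrative only.
ODL_BASE_URL = "http://odl.example.net:8080/controller/nb/v2/neutron"

# Hypothetical mapping of Neutron operations to HTTP verbs.
HTTP_METHODS = {"create": "POST", "update": "PUT", "delete": "DELETE"}


def build_odl_request(operation, resource, payload=None, resource_id=None):
    """Translate a Neutron operation into (method, url, body).

    The request is only described, never sent, so the sketch stays
    independent of any running controller.
    """
    method = HTTP_METHODS[operation]
    url = "%s/%ss" % (ODL_BASE_URL, resource)
    if operation != "create":
        if resource_id is None:
            raise ValueError("update and delete need a resource_id")
        url += "/" + resource_id
    body = None if operation == "delete" else json.dumps({resource: payload})
    return method, url, body


create_request = build_odl_request(
    "create", "network", {"id": "n1", "name": "net1"}
)
delete_request = build_odl_request("delete", "port", resource_id="p1")
```

The driver itself stays thin in this model: it forwards what Neutron already decided and leaves the actual network programming to the controller.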

Instead of relying on specific L2 agents on each compute node, a single ODL controller configures the Open vSwitch instances directly by means of its OVSDB plugin.

As I have mentioned in my OpenDaylight Clustering Made Easier with OVSDB blog post, the OVSDB protocol uses JSON-RPC calls over an active or passive client connection to manipulate a physical or virtual switch that has OVSDB attached to it. The connection mode must be set up manually using the ovs-vsctl tool on the networking and compute nodes.
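As a rough illustration of the two connection modes, the sketch below builds the `ovs-vsctl set-manager` argument list for each mode and a minimal OVSDB JSON-RPC request. The `tcp:` and `ptcp:` target forms and the `list_dbs` method come from Open vSwitch and the OVSDB protocol; port 6640 and the address `192.0.2.10` are assumptions for the example, and the helper names are hypothetical. Nothing is executed or sent.

```python
import json

OVSDB_PORT = 6640  # assumed port; adjust to the actual deployment


def manager_target(mode, controller_ip=None, port=OVSDB_PORT):
    """Return the manager target string for the given connection mode."""
    if mode == "active":
        # The switch's OVSDB server connects out to the controller.
        if controller_ip is None:
            raise ValueError("active mode needs the controller address")
        return "tcp:%s:%d" % (controller_ip, port)
    if mode == "passive":
        # The OVSDB server listens and waits for the controller to connect.
        return "ptcp:%d" % port
    raise ValueError("unknown mode: %r" % mode)


def set_manager_command(mode, controller_ip=None):
    """Build (but do not run) the ovs-vsctl command as an argument list."""
    return ["ovs-vsctl", "set-manager", manager_target(mode, controller_ip)]


def list_dbs_request(request_id=0):
    """Serialize a JSON-RPC request asking the server for its databases."""
    return json.dumps({"method": "list_dbs", "params": [], "id": request_id})


active_cmd = set_manager_command("active", "192.0.2.10")
passive_cmd = set_manager_command("passive")
```

The resulting lists can be passed to `subprocess.run` on a node where Open vSwitch is installed.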

The Devstack tool, a shell script intended for building a complete OpenStack development environment, supports running OpenDaylight as an OpenStack Neutron plugin that manages Open vSwitches over the active client connection, though I suppose the passive connection mode should work as well.

OpenDaylight’s OVSDB subsystem creates per-tenant networks using GRE or VXLAN tunnels, based on information provided by OpenStack Neutron.

The way I see it, the next step toward improving OpenStack networking reliability is ensuring that Open vSwitch behaves properly when the connection to the OpenDaylight controller fails or the controller itself crashes. It seems that ODL Clustering is a perfect fit for this purpose.
