Exploring the possibilities of one of the most promising SDN solutions – the OpenDaylight Controller.

Less than a month ago the OpenDaylight (ODL) project made its first code release – Hydrogen. Since we’ve been exploring OpenDaylight for a while now, we couldn’t ignore this event and started studying the possibilities of Hydrogen and putting them into practice.

One of the OpenDaylight features that we’re particularly interested in is clustering. Or rather, as the ODL clustering wiki duly notes, it is not a feature but “…part of the [ODL] Controller Infrastructure” that allows creating clusters of multiple ODL controllers.

Part of our research dealt with switch-to-cluster communication when new controller instances are started within the Cluster. Switches need to recognize these instances and communicate with them properly. The OVSDB protocol, which Open vSwitch supports, addresses this by supplying the switch’s database server with configuration data, and that’s basically what we’re going to talk about.

Some preliminary info

First of all, follow the ODL wiki instructions to start up the ODL Cluster.

The ODL GUI has a Clustering option that shows the status of the Cluster. You can find it under the options menu (top right side of the screen):

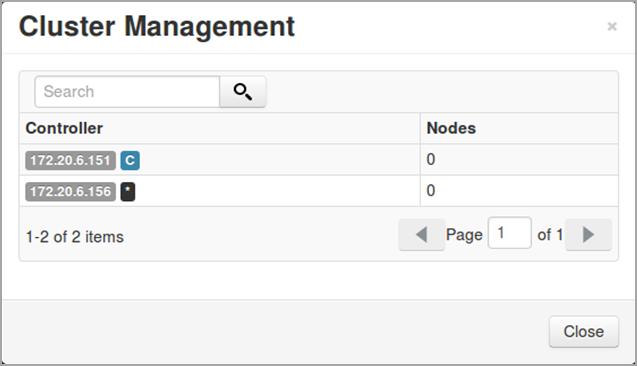

The Cluster Management window looks as follows:

Here you can see the addresses of hosts with running OpenDaylight Controllers that constitute the OpenDaylight Cluster.

The list of Cluster nodes can be retrieved from OpenDaylight’s OSGi CLI as well:

    getClusterNodes
    172.20.6.151
    172.20.6.156

OpenDaylight’s OSGi CLI also provides the following commands for setting or retrieving the clustering connection scheme and for retrieving the list of connected nodes:

    ---Connection Manager---
    scheme [<name>] - Print / Set scheme
    printNodes [<controller>] - Print connected nodes

The clustering connection scheme is defined in the opendaylight/configuration/config.ini file (ANY_CONTROLLER_ONE_MASTER by default). The full list of connection schemes is shown below. The last three schemes are included on an experimental basis for future extensions.

    /**
     * All the nodes are connected with a Single Controller.
     * The SingleControllerScheme algorithm will determine that one
     * controller to which all the nodes are connected with.
     * This is like Active-Standby model from a South-Bound perspective.
     */
    SINGLE_CONTROLLER("All nodes connected with a Single Controller"),

    /**
     * Any node can be connected with any controller. But with just 1
     * master controller.
     */
    ANY_CONTROLLER_ONE_MASTER("Nodes can to connect with any controller in the cluster"),

    /**
     * Simple Round Robin Scheme that will let the nodes connect
     * with each controller in Active-Active cluster in a round robin fashion.
     */
    ROUND_ROBIN("Each node is connected with individual Controller in Round-Robin fashion"),

    /**
     * Complex Load Balancing scheme that will let the nodes connect
     * with controller based on the resource usage in each
     * of the controllers in a cluster.
     */
    LOAD_BALANCED("Connect nodes to controllers based on the Controller Load"),

    /**
     * Container based scheme will let the nodes connect with
     * controller based on the container configuration.
     */
    CONTAINER_BASED("Connect nodes to controllers based on Container they belong to")

Multiple controllers make load balancing possible and improve reliability, because a switch can continue operating if one controller or controller connection fails. For this to work, every switch must be configured with all the controllers, so that no switch loses connectivity with the cluster.

During controller failover, one of the controllers becomes the master of each switch. ODL’s SINGLE_CONTROLLER scheme chooses a single controller as the master for all the switches, so it covers the active/standby scenario only.

And now about the OVSDB

Open vSwitch can be configured to communicate with one or more external OpenFlow controllers. The IP address and TCP port of a target controller can be specified as follows:

    ovs-vsctl set-controller <BRIDGE> tcp:$CONTROLLER_ADDRESS:$CONTROLLER_PORT

In order to assign multiple controllers to the Open vSwitch, you just need to specify all necessary controller addresses and ports in the same command:

    ovs-vsctl set-controller <BRIDGE> \
        tcp:$CONTROLLER_ADDRESS1:$CONTROLLER_PORT1 \
        tcp:$CONTROLLER_ADDRESS2:$CONTROLLER_PORT2 \
        tcp:$CONTROLLER_ADDRESS3:$CONTROLLER_PORT3 \
        tcp:$CONTROLLER_ADDRESS4:$CONTROLLER_PORT4 ...

NOTE: Do not use separate commands for assigning multiple controllers, as the last command overwrites the previous controller configuration.
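Since every `set-controller` call replaces the previous list, it can help to build the full target list in one place. The following is only a sketch: `controller_targets` is a hypothetical helper of ours, and the bridge name `s1` and port 6633 are example values rather than anything mandated by OvS or ODL. The script prints the command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper: given a port followed by controller IPs, print the
# matching "tcp:IP:PORT" targets on one line, separated by spaces.
controller_targets() {
    port=$1
    shift
    targets=""
    for ip in "$@"; do
        # Prepend "existing targets + space" only when the list is non-empty.
        targets="${targets:+$targets }tcp:$ip:$port"
    done
    printf '%s\n' "$targets"
}

# Example values (assumptions): bridge s1, OpenFlow port 6633.
BRIDGE=s1
echo "ovs-vsctl set-controller $BRIDGE $(controller_targets 6633 172.20.6.151 172.20.6.156)"
```

Printing the command first makes it easy to confirm that all controllers end up in a single invocation before anything is changed on the switch.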

In order to connect Mininet to the OpenDaylight Cluster, first you need to start Mininet without a controller:

    sudo mn --controller=none --switch=ovsk --topo tree,3

Then, use the “ovs-vsctl set-controller” command to reconfigure all OvS bridges created by Mininet to connect to each OpenDaylight controller in the Cluster and thus address the switchover scenario.
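For a topology with several bridges, the per-bridge commands can be generated rather than typed. A minimal sketch, assuming bridge names arrive one per line on standard input; `gen_set_controller_cmds` is a hypothetical name of ours, and the controller targets (including port 6633) are example values.

```shell
#!/bin/sh
# Read bridge names (one per line) on stdin and print one set-controller
# command per bridge. The function arguments are the controller targets.
gen_set_controller_cmds() {
    while IFS= read -r bridge; do
        [ -n "$bridge" ] || continue    # skip blank lines
        echo "ovs-vsctl set-controller $bridge $*"
    done
}

# Dry run with two hand-typed bridge names:
printf 's1\ns2\n' |
    gen_set_controller_cmds tcp:172.20.6.151:6633 tcp:172.20.6.156:6633
```

On the Mininet host, `sudo ovs-vsctl list-br` prints the existing bridge names one per line, so its output can be piped into the function. Review the generated commands before feeding them to a shell.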

The OvS connection status can be verified as follows:

    sudo ovs-vsctl show
        Bridge "s6"
            Controller "tcp:172.20.6.156"
                is_connected: true
            Controller "tcp:172.20.6.151"
                is_connected: true
            fail_mode: secure
            Port "s6-eth3"
                Interface "s6-eth3"
            Port "s6-eth2"
                Interface "s6-eth2"
            Port "s6"
                Interface "s6"
                    type: internal
            Port "s6-eth1"
                Interface "s6-eth1"
        ovs_version: "2.0.0"
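Reading this output by eye gets tedious once there are many bridges, so the check can be scripted. The sketch below is ours and not part of Open vSwitch: a hypothetical `controller_summary` function that reads `ovs-vsctl show` output and prints, for each bridge, how many of its configured controllers report `is_connected: true`. It assumes the output layout shown above.

```shell
#!/bin/sh
# Summarise `ovs-vsctl show` output read from stdin as "<bridge> <up>/<total>".
controller_summary() {
    awk '
        function report() {
            if (bridge != "") printf "%s %d/%d\n", bridge, up, total
        }
        # A new bridge starts: print the previous one and reset the counters.
        $1 == "Bridge"     { report(); bridge = $2; gsub(/"/, "", bridge)
                             up = 0; total = 0; last = "" }
        $1 == "Manager"    { last = "m" }
        $1 == "Controller" { total++; last = "c" }
        # Count is_connected only when it belongs to a Controller entry.
        $1 == "is_connected:" && $2 == "true" && last == "c" { up++ }
        END                { report() }
    '
}

# Demo on a fragment of the output above.
controller_summary <<'EOF'
Bridge "s6"
    Controller "tcp:172.20.6.156"
        is_connected: true
    Controller "tcp:172.20.6.151"
        is_connected: true
    fail_mode: secure
EOF
# prints: s6 2/2
```

On a live host the function would be fed with `sudo ovs-vsctl show | controller_summary`. Any bridge whose two numbers differ has at least one controller that is configured but not connected.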

This manual approach becomes laborious as the number of switches in the Mininet topology grows. Also, keep in mind that whenever the OpenDaylight Cluster is extended with additional ODL Controllers, all switches have to be reconfigured to cover the switchover scenario.

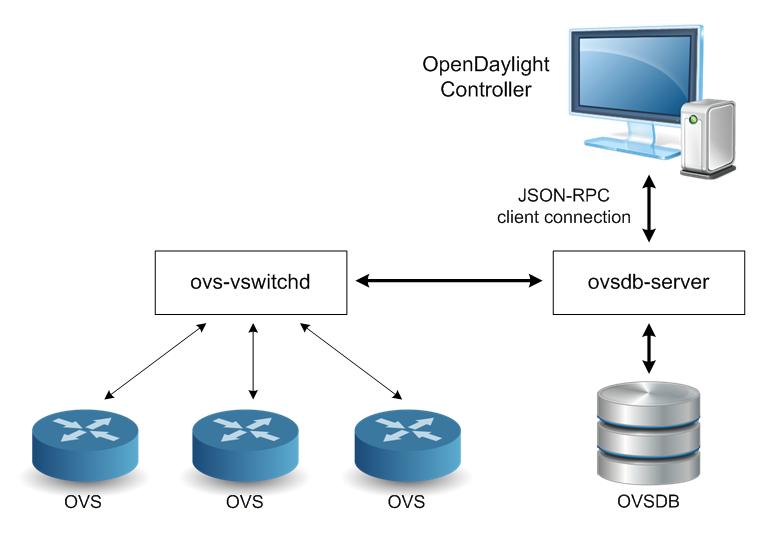

These issues can be solved by means of the OVSDB protocol. The OpenDaylight Controller includes an OVSDB bundle that implements the Open vSwitch Database management protocol, enabling southbound configuration of vSwitches.

The OVSDB protocol uses JSON-RPC calls to manipulate a physical or virtual switch that has OVSDB attached to it. The RPC database interface is provided by the ovsdb-server application, which supports JSON-RPC client connections over active or passive TCP/IP or Unix domain sockets. The ovs-vswitchd daemon manages and controls any number of Open vSwitch switches on the local machine.
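To give a feel for what travels over that connection, here is a sketch of one of the simplest OVSDB calls, `list_dbs`, which takes no parameters and asks the server which databases it hosts (RFC 7047, section 4.1.1). The `ovsdb_request` helper is a hypothetical name of ours and only handles parameterless methods.

```shell
#!/bin/sh
# Build a parameterless OVSDB JSON-RPC request.
#   $1 = method name, $2 = numeric request id
ovsdb_request() {
    method=$1
    id=$2
    printf '{"method":"%s","params":[],"id":%s}\n' "$method" "$id"
}

ovsdb_request list_dbs 0
# prints: {"method":"list_dbs","params":[],"id":0}
```

Against an ovsdb-server that accepts TCP connections, the stock `ovsdb-client list-dbs tcp:<switch-ip>:<port>` command issues the same call, which is a convenient way to confirm the server is reachable.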

In passive management mode, the ODL Controller/Cluster initiates a connection to the OVS elements that are listening on a specified port. Then, through the established JSON-RPC connection, the vSwitches get the list of all Controllers that constitute the Cluster.

In order to connect Mininet to ODL Cluster, start Mininet without a controller:

    sudo mn --controller=none --switch=ovsk --topo tree,3

Then, configure the OVSDB connection manager to listen on TCP port 6634:

ovs-vsctl set-manager ptcp:6634

There are two ways to start the JSON-RPC client connection between the OpenDaylight Controller and the OVSDB server:

1. REST API:

/controller/nb/v2/connectionmanager/node/{connectionName}/address/{ipAddress}/port/{port}

2. OSGi CLI:

ovsconnect <connectionName> <ipAddress> <port>
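As an illustration of the REST option, the path template can be filled in with a small helper. This is a sketch under stated assumptions: `ovsdb_connect_url` is a hypothetical helper of ours, the controller base URL `http://127.0.0.1:8080` is an assumed default, and the connection name and switch address (`mininet`, `192.0.2.10`) are example values.

```shell
#!/bin/sh
# Assumed controller base URL; override ODL_BASE to match your setup.
ODL_BASE=${ODL_BASE:-http://127.0.0.1:8080}

# Fill in the connection manager path:
#   $1 = connection name, $2 = switch IP, $3 = OVSDB port
ovsdb_connect_url() {
    name=$1
    ip=$2
    port=$3
    printf '%s/controller/nb/v2/connectionmanager/node/%s/address/%s/port/%s\n' \
        "$ODL_BASE" "$name" "$ip" "$port"
}

# Example values only.
ovsdb_connect_url mininet 192.0.2.10 6634
```

The resulting URL could then be passed to an HTTP client, for example `curl -u <user>:<password> -X PUT "$(ovsdb_connect_url mininet 192.0.2.10 6634)"`. The PUT method here is our assumption, so check the northbound API documentation for your ODL release before relying on it.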

NOTE: After the ODL Cluster has been extended with additional ODL Controllers, the client connection request (REST or OSGi) must be rerun.

To sum up, I would like to point out that the main principle of SDN is management and data plane separation; and while the data plane configuration is fully defined by OpenFlow protocol, the management plane configuration still has gaps. It looks like OVSDB protocol is a good patch for this hole. In combination with OpenDaylight clustering, it enables robust update and propagation of changes in the management plane.
